Week 6

In Audio Arts this week we spent time in Studio 1, first reviewing how to route signal into Pro Tools. We also looked at monitoring through the monitor speakers and through headphones using the headphone amp, and at creating a headphone mix for recording musicians. Finally, we sent signal through all the different rooms as a complete loop, i.e. Studio 2, Dead Room, EMU Space and Studio 1. This was very interesting and I realised that the studio setup is a lot more flexible than I originally thought. [1]

In Creative Computing we spent the whole lecture looking at two pieces of software, SPEAR and SoundHack. We had looked at SPEAR before and I have spent a little time playing around with it. I think this software has the potential to allow for some creative outcomes. SoundHack has some stranger features, which I will explore in due course. The ability to play any file as audio (including non-sound files) is strange and not something I have ever seen before. [2]
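As a rough illustration of what "playing any file as audio" could mean, the sketch below wraps arbitrary bytes in a WAV header so that each byte is read as one 8-bit mono sample. This is an assumption about the general idea only, not a description of how SoundHack actually works; the function name `bytes_to_wav` and the default sample rate are made up for the example.

```python
import io
import wave


def bytes_to_wav(raw: bytes, sample_rate: int = 44100) -> bytes:
    """Wrap arbitrary bytes in a WAV container (hypothetical helper).

    Each input byte is treated as one unsigned 8-bit mono sample,
    so any file's contents become a playable (if noisy) waveform.
    """
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)          # mono
        wav.setsampwidth(1)          # 1 byte per sample = 8-bit audio
        wav.setframerate(sample_rate)
        wav.writeframes(raw)         # the raw bytes become the sample data
    return buffer.getvalue()
```

To try it, read any file with `open(path, "rb").read()`, pass the bytes to `bytes_to_wav`, and write the result to a `.wav` file. Text files and images tend to produce buzzing or noise rather than anything tonal.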

In Forum we listened to a number of pieces. Once again David Harris brought a variety of alternative music. The first was Ecuatorial (1934) by Edgard Varese ('Edgard Varese', Wikipedia. Accessed 10/04/2006). This piece consisted of bass, 4 trumpets, 4 trombones, piano, organ, 2 Ondes Martenot, and 5 percussion instruments. The strings were very full in this piece and were an essential part of the overall sound. There was a deep male voice which gave the piece an operatic feel. Overall I felt the piece was dramatic and primal. I enjoyed it and thought it allowed for experimentation while still holding a strong musical sense. [3]

The next piece was Ensembles for Synthesizer by Milton Babbitt. This was a 12-tone piece, but to me it sounded more like a bunch of random sounds. Still, I give Babbitt some credit because the rhythm also followed the 12-tone technique, i.e. each note had to have a different rhythmic value before any one rhythm was repeated. I could hear that each note was a different pitch, but it was hard to tell whether each rhythmic value was different. Personally I don’t see how you can tell, because there is no obvious timing, or if there is timing it’s virtually impossible to hear. I didn’t really like this piece and it didn’t sound like music to my ears. [3]
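The "no repeats until every value has been used" rule can be written down as a small check. This is only a simplified sketch: it assumes the series is stated back to back in blocks of 12, which real serial pieces do not necessarily do, and the function name `follows_series_rule` is invented for the example. The same check works for pitch classes or for rhythmic values.

```python
def follows_series_rule(values, series_size=12):
    """Return True if no value repeats inside any consecutive block.

    The sequence is cut into blocks of `series_size` items (the last
    block may be shorter); a repeat inside a block breaks the rule.
    """
    for start in range(0, len(values), series_size):
        block = values[start:start + series_size]
        if len(set(block)) != len(block):
            return False
    return True


# Pitch classes 0-11 used once each, then the same row backwards.
row = [0, 4, 7, 1, 9, 11, 2, 5, 10, 3, 6, 8]
print(follows_series_rule(row + row[::-1]))  # True
```

A rhythmic series could be tested the same way by passing twelve distinct durations (for example, 1 to 12 units of a short note value) instead of pitch classes.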

The next piece was by Barry Truax: Wings of Nike I Album. This was just one continuous sound played throughout the whole piece. I kept waiting for something to happen, and then the piece finished. I thought it was uninspiring. [3]

We spent the whole second hour of Forum discussing what exactly music technology is. I found this quite interesting, and it was really good to hear points of view not only from lecturers but also from students from different backgrounds. I think the overall conclusion from the session was that music technology is a hybrid of different disciplines. I agree with this because music technology is a new and growing field. It evolved from technology and is used to deal with music. Music has always been around but technology is new, so the field is a hybrid and will continue to adopt new ideas and innovations as technology grows. [4]

[1] Christian Haines. "Audio Arts Lecture - Studio 1, Studio 2, Dead Room, EMU Space". Lecture presented at the Electronic Music Unit, University of Adelaide, 4th April 2006.

[2] Christian Haines. "Creative Computing Lecture - Audio Lab". Lecture presented at the Audio Lab, University of Adelaide, 6th April 2006.

[3] David Harris. "Music Technology Forum Lecture - EMU Space". Lecture presented in the EMU Space, Electronic Music Unit, University of Adelaide, 6th April 2006.

[4] Stephen Whittington, Mark Carroll, Tristan Louth-Robbins. "What is Music Technology?". Presented at EMU Space, Electronic Music Unit, University of Adelaide, 6th April 2006.

Monday, April 10, 2006

Monday, April 03, 2006

Week 5

Today in Audio Arts we spent time in Studio 1 for the first time. This studio has an awesome control surface for Pro Tools, the Control 24. [1] It has 24 motorised faders and can control and automate all of the Pro Tools plug-ins and faders in the mixer window. I think the surround sound setup would be great to use and I look forward to learning how to mix in 5.1 surround. Studio 1 has a different setup to Studio 2 because you have to patch in the speakers to hear any sound. Hopefully this doesn’t cause problems for me when I start using the studio. [2]

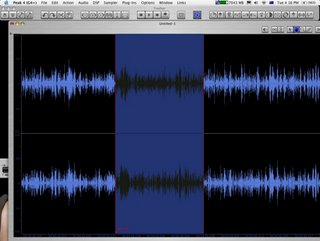

In Creative Computing we spent more time on audio theory. The focus was on organisational tools such as markers, regions, and loop points. We also talked about how these markers, regions, and loop points can then be imported into a sequencer such as Pro Tools or Cubase. I am going to test whether they can also be imported into Propellerhead’s Reason and Apple’s Logic Pro, as I use these programs all the time. The emphasis was on saving time.

I have used Peak a fair bit [3], so knowing how to create markers, regions and loops wasn’t anything new. However, I noticed Christian using some keyboard shortcuts which I didn’t know about. I am definitely going to learn these as they will make my sound editing a lot quicker in the future.

We brushed through some of the effects and paid particular attention to up-sampling and down-sampling. I have used sample rate conversion before but didn’t know that it was called up-sampling or down-sampling. Towards the end of the class we looked at the software SPEAR. This was the first time I had seen any kind of non-linear audio editor. The features of this program were new to me and I found it really interesting that you can edit sound as a “palette of frequencies”. This software is definitely going to consume many hours of experimentation for me. [4]
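To make up-sampling and down-sampling a bit more concrete, here is a minimal sketch of sample rate conversion using linear interpolation between neighbouring samples. It is an assumption-laden toy, not how Peak or any other editor does it: real converters use proper filtering, and down-sampling without a low-pass filter first can cause aliasing. The function name `resample_linear` is made up for the example.

```python
def resample_linear(samples, src_rate, dst_rate):
    """Convert a list of samples from src_rate to dst_rate (toy version).

    Each output sample is a straight-line blend of the two input
    samples on either side of its position in time.
    """
    if not samples:
        return []
    n_out = max(1, round(len(samples) * dst_rate / src_rate))
    out = []
    for i in range(n_out):
        pos = i * src_rate / dst_rate      # position in the input, in samples
        left = int(pos)
        if left >= len(samples) - 1:       # past the end: hold the last sample
            out.append(float(samples[-1]))
            continue
        frac = pos - left                  # how far between left and left + 1
        out.append(samples[left] * (1 - frac) + samples[left + 1] * frac)
    return out


# Up-sampling doubles the number of samples; down-sampling halves it.
print(resample_linear([0.0, 1.0], 1, 2))            # [0.0, 0.5, 1.0, 1.0]
print(resample_linear([0.0, 1.0, 2.0, 3.0], 4, 2))  # [0.0, 2.0]
```

Going from 44100 Hz to 48000 Hz would be up-sampling and 48000 Hz to 44100 Hz would be down-sampling; the same function handles both because only the ratio of the two rates matters.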

During our Forum session David Harris played us some music by John Cage. [5] The pieces were “Music for Carillon (1954)” and “Williams Mix (1952)”. Both were long, repetitive pieces, which I didn’t like at all. The second piece took 9 months to make, as it was created by splicing together many different tape recordings. The final result was not worth the effort. I did think that there were some interesting sounds in the piece, but on the whole it sounded like a bunch of random sounds instead of a musical composition. I see John Cage as a sound design artist more so than a composer. [6]

Chris Williams was the guest speaker for this week. Chris spoke about his experience working in film post-production, in areas such as sound design and sound effects. He also works as a sound engineer. He showed us some work that he had done in Pro Tools, i.e. a full 25 minute session file of music and sounds for a movie. This forum was especially interesting for me because one of my eventual goals is to work in film when I finish my studies. [7]

[1] Control 24. 'Integrated front end for Pro Tools TDM and LE systems', Digidesign. http://www.digidesign.com/products/control/control24/ (Accessed 3/04/2006)

[2] Christian Haines. 'Audio Arts - Studio 1 & EMU Space'. Lecture presented at Electronic Music Unit, University of Adelaide, 28th March 2006.

[3] Peak screenshot, created on 3rd April 2006

[4] Christian Haines. 'Creative Computing - Audio Lab'. Lecture presented at Electronic Music Unit, University of Adelaide, 30th March 2006.

[5] John Cage. 'John Milton Cage', Wikipedia. http://en.wikipedia.org/wiki/John_Cage (Accessed 3/04/2006)

[6] David Harris. 'Music Technology Forum Lecture - EMU Space'. Lecture presented in the EMU Space, Electronic Music Unit, University of Adelaide, 30th March 2006.

[7] Chris Williams. 'Music Technology Forum Lecture - EMU Space'. Lecture presented in the EMU Space, Electronic Music Unit, University of Adelaide, 30th March 2006.