Week 10 Forum – Scratching

I was very impressed with today’s forum; it was something I can really relate to. I appreciate that Stephen is open to the idea of DJing as an artform, even though in traditional music circles it isn’t exactly regarded as the most scholarly form of music.

I had heard of many of the artists in today's film through my own interest in researching the roots of DJing. DJ Qbert, DJ Shadow and Mix Master Mike are all legends in the art of scratching. Grandmaster Flash, Kool Herc and Grand Wizard Theodore are, of course, the pioneers of this artform.

One part of the film I could really relate to was when the DJs talked about digging for vinyl. One of the biggest issues in the DJ industry now is the rise of digital downloading. The culture of spending hours plowing through crates of records has been replaced by simply clicking on a computer. As a DJ I hate playing MP3s and will always remain a vinyl purist.

[2]

anyone can learn to scratch. . .

[1] Stephen Whittington, "Music Technology Forum: Semester 1 - Week 10 – Scratch". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 22nd May 2008

[2] WP Remix, Cats Learning How to be a DJ. http://iwdj.com/ (Accessed 23/5/8)

Monday, May 26, 2008

CC3 Week 10 - Streams (III)

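I created a new SynthDef for this week's exercise, using brown noise as my sound source. The noise passes through a resonant band-pass filter (Resonz) whose centre frequency sweeps from a start frequency to an end frequency. Using a Pbind I vary the start and end frequencies of the sweep, as well as the filter's width (rq, the bandwidth relative to the centre frequency, i.e. the reciprocal of Q).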

Click here for my audio example [252KB].

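(
SynthDef("BrownNoise", {
    arg cFreq = 500, cAmp = 0.5, mFreq = 3, mAmp = 0.75, start = 1000, end = 8000, time = 10, width = 0.001;
    var carrier, modulator, envStruct, envGen, filter, out;

    // sine modulator (computed, but not applied to the output in this patch)
    modulator = SinOsc.ar(freq: mFreq, mul: mAmp);

    // brown noise is the sound source
    carrier = BrownNoise.ar(1);

    // Envelope Structure: 6 seconds in total (1 + 2 + 3)
    envStruct = Env(
        levels: [1.0, 0.35, 0.6, 0],
        times: [1, 2, 3],
        curve: 'welch'
    );

    // Envelope Instance: doneAction 2 frees the synth when the envelope ends
    envGen = EnvGen.kr(
        envelope: envStruct,
        gate: 1.0,
        doneAction: 2
    );

    // resonant band-pass filter: the centre frequency sweeps exponentially
    // from start to end over time seconds; width is rq (bandwidth / centre frequency)
    filter = Resonz.ar(carrier, XLine.kr(start, end, time), width) * envGen;

    // same signal on the left and right channels
    out = Out.ar(bus: 0, channelsArray: [filter, filter]);
}).store; // .store adds the SynthDesc, so Pbind knows to send start, end and width
)

// a single test note
Synth("BrownNoise", [\start, 300, \end, 1000, \time, 3, \width, 0.1]);

// reload SynthDescs from disk (not strictly needed after .store)
SynthDescLib.read;

(
// six events, one per second (the default \dur); each Pseq runs once.
// \time is not set, so each 10-second sweep is cut short when the
// 6-second envelope frees the synth.
Pbind(
    \instrument, "BrownNoise",
    \width, Pseq(#[0.001, 0.05, 0.01, 0.5, 0.75, 1], 1),
    \start, Pseq(#[100, 500, 1000, 2000, 5000, 10000], 1),
    \end, Pseq(#[300, 1000, 5000, 8000, 2000, 500], 1)
).play;
)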
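As a rough sketch of the arithmetic behind this patch: XLine moves exponentially, so its value at time t is start * (end / start) ^ (t / time), and Resonz's width argument is rq, the bandwidth divided by the centre frequency. The Python below is not SuperCollider; the function names are hypothetical, and it assumes those textbook formulas to print the centre frequency and bandwidth of the single test note (300 Hz to 1000 Hz over 3 seconds, width 0.1).

```python
def xline(start: float, end: float, dur: float, t: float) -> float:
    """Exponential sweep from start to end over dur seconds, held at end afterwards."""
    if t >= dur:
        return end
    return start * (end / start) ** (t / dur)


def bandwidth_hz(centre: float, rq: float) -> float:
    """Resonz-style bandwidth: rq is bandwidth divided by centre frequency (1/Q)."""
    return centre * rq


# The single test note: 300 Hz -> 1000 Hz over 3 s with width (rq) 0.1
for t in (0.0, 1.5, 3.0):
    f = xline(300, 1000, 3, t)
    print(f"t={t:.1f}s  centre={f:7.1f} Hz  bandwidth={bandwidth_hz(f, 0.1):6.1f} Hz")
```

Halfway through an exponential sweep the frequency sits at the geometric mean of the endpoints, not the arithmetic mean. In the Pbind, time stays at its default of 10 seconds while the envelope frees each synth after 6 seconds, so xline(start, end, 10, 6) estimates how far each note's sweep actually gets.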

[1] Christian Haines. "Creative Computing: Semester 1 - Week 10 - Streams III". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 8th May 2008

CC3 Week 9 - Streams (II)

For this week's patch I used the SynthDef I made in week 7. I used Pshuf to generate shuffled sequences that control the playback speed, modulator frequency and modulator amplitude of the sample, with a fourth Pshuf setting the time between notes. I found this week's exercise fairly straightforward since the ideas were really the same as last week's.

Click here for my audio example [464KB].

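// Here is the sample for this patch.
b = Buffer.read(s, "sounds/Producers @ The Electric Light Hotel.wav");

(
// b.bufnum is fixed into the SynthDef when it is built, so read the buffer first
SynthDef("BufferPlay", {
    arg speed = 1, amp = 1, pan = 0, mFreq, mAmp;
    var sig, pan2, envStruct, envGen, mod;

    // loop the mono sample; BufRateScale compensates for the file's sample rate
    sig = PlayBuf.ar(1, b.bufnum, BufRateScale.kr(b.bufnum) * speed, loop: 1);
    pan2 = Pan2.ar(sig, pan);

    // sine wave multiplied with the signal (ring modulation)
    mod = SinOsc.ar(freq: mFreq, mul: mAmp);

    // Envelope Structure: 16 seconds in total
    // (no .plot here: plotting inside a SynthDef opens a window on every build)
    envStruct = Env(
        levels: [0, 1, 0.5, 0.3, 0],
        times: [2.0, 1.0, 3.0, 10.0],
        curve: 'lin'
    );

    // Envelope Instance: doneAction 2 frees the synth when the envelope ends
    envGen = EnvGen.kr(
        envelope: envStruct,
        gate: 1.0,
        doneAction: 2
    );

    Out.ar(bus: 0, channelsArray: amp * pan2 * mod * envGen);
}).send(s);
)

a = Synth("BufferPlay", [\speed, 1, \pan, -1, \mFreq, 1, \mAmp, 1]);

(
// local streams; these shadow the global a and b inside this block only
var a, b, c, d;
a = Pshuf.new(#[1, 1.5, 2, 2.5], 3).asStream;     // playback speed
b = Pshuf.new(#[1, 2, 3, 4], 3).asStream;         // modulator frequency
c = Pshuf.new(#[0.25, 0.5, 0.75, 1], 3).asStream; // modulator amplitude
d = Pshuf.new(#[0.25, 0.5, 0.75, 1], 3).asStream; // wait time between synths

Task({
    // 4 values x 3 repeats = 12 values per stream
    12.do({
        Synth("BufferPlay", [\speed, a.next, \mFreq, b.next, \mAmp, c.next]);
        d.next.wait;
    });
}).play;
)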
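Pshuf shuffles its list once and then repeats that same order, which is why each stream in the Task supplies 4 x 3 = 12 values for the 12 iterations. The Python generator below is a hypothetical model of that behaviour, assuming Pshuf scrambles once per stream rather than once per repeat; it is a sketch, not SuperCollider's implementation.

```python
import random


def pshuf(items, repeats, rng=None):
    """Yield items in one shuffled order, repeated `repeats` times (modelled on Pshuf)."""
    if rng is None:
        rng = random.Random()
    order = list(items)
    rng.shuffle(order)  # shuffled once for the whole stream, not once per repeat
    for _ in range(repeats):
        yield from order


# Playback speeds as in the Task: 12 values, the same 4-value order three times
speeds = list(pshuf([1, 1.5, 2, 2.5], 3, random.Random(1)))
print(speeds)
```

A seeded random.Random is passed in only so the shuffle is repeatable; leaving rng out gives a fresh order each run, as the Task does.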

[1] Christian Haines. "Creative Computing: Semester 1 - Week 9 - Streams II". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 1st May 2008

Week 9 Forum – Student Presentations

Today we had presentations from Seb Tomczak and Darrin Curtis. Seb presented an instrument he built that shines lasers through water. The idea is quite interesting, and I think it has a lot of commercial potential provided Seb can enclose the water so the instrument is safe to use. It would also be good if he could get rid of the computer, which he said he was working on.

Seb also talked about Milkcrate, a musical project he has been a part of for a few years now. I was very impressed by the piece he showed us in which he hit a milk crate once and then created an entire piece from that one recorded sound.

Darrin Curtis has been researching music therapy. I thought his ideas on healing with music were very interesting, and it is something I have looked into in past years. I thought the idea of generating music through crystals was original, and the beating frequencies generated in his piece were very soothing and peaceful to listen to. Impressive work.

[1] Seb Tomczak & Darrin Curtis, "Music Technology Forum: Semester 1 - Week 9 – Student Presentations". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 15th May 2008

AA3 Weeks 8 & 9 – Live Sound & Acoustics

What do you think the purpose of live recording is? Consider the advantages and disadvantages of this approach.

A live recording is very different to a studio recording in many ways. The obvious difference, of course, is that a live recording captures a live performance. Yes, a studio recording also captures musicians playing live, but rather than performing for an audience on a stage, they’re sitting in a little booth in an acoustically treated room.

When you go to see a live act, what is the appeal of seeing them live instead of sitting at home listening to the band’s CD? I think if you ask yourself this question, the answer is very similar to the difference between a live and a studio recording.

This is my answer: it’s just the vibe, mate.

A big live performance usually involves a massive arena, thousands of screaming fans and a stage full of speakers and instruments. The band gets a huge adrenaline rush and is overwhelmed by the crowd.

When they start playing, that is exactly what they’re doing: performing, and not just musically. They are engaging the crowd and creating a vibe. A live recording captures the energy of the performers at that moment. There may even be some bum notes in a guitar solo, but if the recording captures an amazing atmosphere or mood, then that’s a positive outcome.

The purpose of a studio recording is to capture the best take. The sound is supposedly perfect, and whilst it does sound good, it is simply a different sound to a live recording. I’m not saying a studio recording lacks energy; I am just comparing two different recording environments.

A studio is generally a nice, quiet place to work. You usually perform in an acoustically treated room that is very quiet, you can have a cup of coffee whenever you feel like it, and you generally can’t smoke or take drugs.

Now compare this setting to a mass of screaming, drunken fans. Smoking and drinking on stage. Blaring guitars cranked to the max and thunderous drums. Ear-piercing vocals in your face.

The band could be playing exactly the same song, but it will sound noticeably different. This is because a musician’s frame of mind is expressed through their performance, and when you feel different, you play differently.

Of course, a jazz or classical performance may not have the same effect on the performers’ emotional state, but there will always be some difference.

The advantage of a live recording is that you really do capture the performance. It is a real performance at a particular point in time. It may not be perfect, but at the end of the day no performance ever is. Provided you have recorded it correctly, it will sound more real.

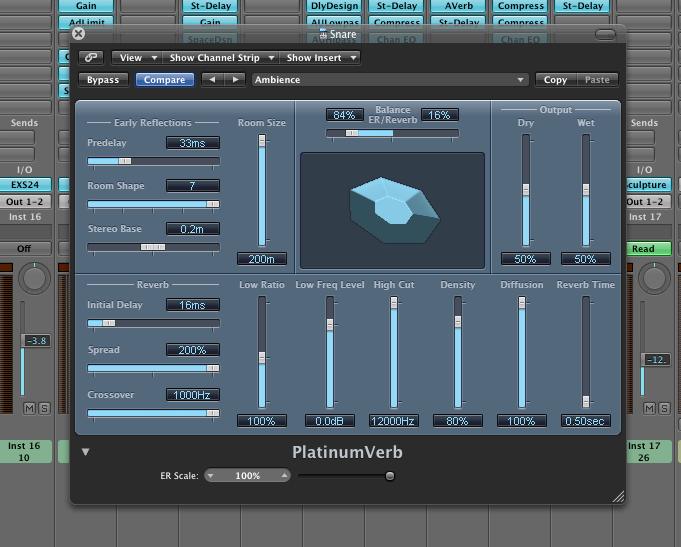

The disadvantage of a live recording is that it lacks the cleanness of a studio environment. A guitar solo recorded in a studio could be a composite of many takes to make it sound perfect. It will be colored using effects to give it just the right amount of delay or reverb. A vocal line could have warmth added to it, or some pitch correction. Whatever the effect, there are virtually no limits to how you can work with audio in a studio.

A studio recording is aimed at making a professional piece of music so that it can be commercially successful. It will always sound right if the engineer knows what he’s doing.

Where do you think the future of studio and venue acoustics lies? Consider the ideas of physical studio acoustics and virtual studio acoustics.

The future of acoustics lies in the growth of technology. We are at the point now where pretty much anything is possible in terms of music technology. I really don’t think there is much more we can learn about acoustics.

We can already measure the wavelengths of sound sources and study how those waves interact with different surfaces. We can calculate the standing waves (room modes) in any recording space and correct these problems. We already know about bass traps, baffles, Helmholtz resonators and many other forms of acoustic treatment. I think as technology grows we will simply get better at measuring and applying these principles.
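As a sketch of what calculating standing waves involves, the textbook formula for the modes of an idealised rectangular room with rigid walls is f = (c / 2) * sqrt((nx / Lx)^2 + (ny / Ly)^2 + (nz / Lz)^2), where nx, ny and nz count half-wavelengths along each dimension. The Python below applies it; the room dimensions and the 343 m/s speed of sound are assumptions for illustration, and real rooms with non-parallel or absorbent walls will deviate from this ideal.

```python
import itertools
import math

SPEED_OF_SOUND = 343.0  # m/s, roughly air at 20 °C (assumed)


def room_modes(lx, ly, lz, max_order=2, c=SPEED_OF_SOUND):
    """Mode frequencies (Hz) of an idealised rectangular room, lowest first."""
    modes = []
    for nx, ny, nz in itertools.product(range(max_order + 1), repeat=3):
        if (nx, ny, nz) == (0, 0, 0):
            continue  # no mode when every index is zero
        f = (c / 2) * math.sqrt((nx / lx) ** 2 + (ny / ly) ** 2 + (nz / lz) ** 2)
        modes.append((round(f, 1), (nx, ny, nz)))
    return sorted(modes)


# Hypothetical 5 m x 4 m x 2.5 m recording room: the six lowest modes
for f, order in room_modes(5.0, 4.0, 2.5)[:6]:
    print(f"{f:6.1f} Hz  mode {order}")
```

The lowest modes, where the spacing between frequencies is widest, are the ones bass traps are usually aimed at.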

It may be presumptuous to say there will definitely be no more advances, but when you consider the technology available to us now, I just can’t think of what is left to learn. Perhaps in the future there will be better materials for acoustically treating rooms (e.g. instead of Rockwool). But we already have better materials now; they just cost more.

As I see it, treating acoustics will simply become easier and more convenient. That has been the trend for many technological advances.

In terms of software, I feel it will continue to improve. Convolution reverbs will get better over time and sound increasingly real. I don’t know if they will ever sound as real as an actual acoustic space, but we are already at the point where they are pretty close.
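A convolution reverb works by convolving the dry signal with an impulse response (IR) recorded in, or designed for, a space: every input sample triggers a scaled copy of the IR, and the copies are summed. The Python below is a minimal direct-form sketch of that idea; the short IR and dry signal are made-up toy values, not a real room measurement.

```python
def convolve(dry, impulse_response):
    """Direct-form convolution: each dry sample adds a scaled copy of the IR."""
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, x in enumerate(dry):
        for j, h in enumerate(impulse_response):
            out[i + j] += x * h
    return out


# Hypothetical toy IR: direct sound followed by two decaying reflections
ir = [1.0, 0.0, 0.5, 0.0, 0.25]
dry = [1.0, 0.0, 0.0, -0.5]
wet = convolve(dry, ir)
print(wet)
```

This direct form costs len(dry) x len(IR) multiplications, which is far too slow for IRs several seconds long; practical convolution reverbs do the same maths with FFT-based (often partitioned) convolution.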

Acoustic emulation in software can be very pleasing and is definitely more convenient than using a real room’s reverb. It is, of course, much cheaper too. Where I see the real advantages and advances is in reverbs that can create environments that are either impossible to record in or don’t exist at all.

What does it sound like to sit in a room with 15 walls? Who knows. But with the right algorithm you can generate suitable pre-delays and reverb times to simulate that environment. How much would it cost to build such a room? It’s not even worth considering.
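As a rough sketch of where such reverb times and pre-delays could come from, the classic Sabine equation estimates reverb time as RT60 = 0.161 * V / A (V in cubic metres, A the total absorption: each surface's area times its absorption coefficient, summed), and pre-delay is the extra distance the first reflection travels divided by the speed of sound. The room, its areas and its absorption coefficients below are hypothetical values chosen for illustration.

```python
SPEED_OF_SOUND = 343.0  # m/s (assumed)


def sabine_rt60(volume_m3, surfaces):
    """Sabine estimate of reverb time in seconds; surfaces are (area m^2, absorption)."""
    total_absorption = sum(area * alpha for area, alpha in surfaces)
    return 0.161 * volume_m3 / total_absorption


def pre_delay_ms(direct_path_m, first_reflection_path_m, c=SPEED_OF_SOUND):
    """Gap between the direct sound and the first reflection, in milliseconds."""
    return (first_reflection_path_m - direct_path_m) / c * 1000


# Hypothetical 15-walled room: 15 walls of 12 m^2, plus floor and ceiling of 60 m^2
walls = [(12.0, 0.05)] * 15
floor_and_ceiling = [(60.0, 0.1), (60.0, 0.3)]
print(round(sabine_rt60(300.0, walls + floor_and_ceiling), 2), "s")
print(round(pre_delay_ms(3.0, 10.0), 1), "ms")
```

Sabine only cares about volume and total absorption, so it says nothing about where those 15 walls send their reflections; an algorithmic reverb would also need to model the pattern of early reflections.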

don't you just love technology!

[1] David Grice. "Audio Arts: Semester 1 - Week 8/9 - Live Sound & Acoustics". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 16th May 2008

[2] "KRK Systems Releases Ergo, Powerful Room Audio Correction System". 2008. http://www.krksys.com/news_pr011708.php (Accessed 22nd May 2008)

[3] IK Multimedia. "Advanced Room Correction System - Chapter 1 - ARC Overview". 2008. http://www.ikmultimedia.com/arc/download/ARC-1.0-UsrMan-CD.pdf (Accessed 22nd May 2008)

[4] Stavrou, Michael Paul. "Stav's Word: Recording a Live Gig." Audio Technology 45: 64-66

CC3 Week 8 - Streams (I)

I used my synthdef from week 6 in this week's exercise. I have generated a very basic sequence using Pseq. This week I really just tried to get my sequence to work. In later weeks I want to experiment further and create more exciting sounds. Click here for my audio example [12KB].

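(
SynthDef("SinTone", {
    arg cFreq = 500, cAmp = 0.5, mFreq = 3, mAmp = 0.75;
    var carrier, modulator, envStruct, envGen, out;

    // the modulator scales the carrier's amplitude (ring modulation)
    modulator = SinOsc.ar(freq: mFreq, mul: mAmp);
    carrier = SinOsc.ar(freq: cFreq, mul: modulator * cAmp);

    // Envelope Structure: 0.3 seconds in total
    envStruct = Env(
        levels: [1.0, 0.35, 0.6],
        times: [0.1, 0.2],
        curve: 'welch'
    );

    // Envelope Instance: doneAction 2 frees the synth when the envelope ends
    envGen = EnvGen.kr(
        envelope: envStruct,
        gate: 1.0,
        doneAction: 2
    );

    // the envelope shapes the tone as well as freeing the synth
    out = Out.ar(bus: 0, channelsArray: carrier * envGen);
}).store;
)

(
var a, b;
// MIDI note numbers converted to Hz; the pattern repeats forever
a = Pseq(#[60, 61, 63, 65, 67, 63], inf).asStream.midicps;
// wait times in seconds (30 values in total)
b = Pseq(#[0.3, 0.4, 0.5, 0.6, 0.7, 0.8], 5).asStream;

Task({
    12.do({
        // take one wait value per note; SinTone has no dur argument
        var dur = b.next;
        Synth("SinTone", [\cFreq, a.next]);
        dur.wait;
    });
}).play;
)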
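The pitch stream in the Task converts MIDI note numbers to frequencies with midicps, and because the Task runs twelve times over a six-note Pseq, the melody plays twice. A small Python sketch of that conversion, assuming SuperCollider's standard equal-tempered tuning with note 69 = 440 Hz (the function name is just a Python stand-in):

```python
def midicps(note: float) -> float:
    """Convert a MIDI note number to frequency in Hz (note 69 = A4 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


notes = [60, 61, 63, 65, 67, 63]
print([round(midicps(n), 2) for n in notes])
```

Each semitone multiplies the frequency by 2^(1/12), so twelve semitones exactly double it.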

[1] Christian Haines. "Creative Computing: Semester 1 - Week 8 - Streams I". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 24th April 2008

Week 8 Forum – Peter Dowdall Presentation

This week’s forum was without a doubt the best forum I’ve attended in my years at this university. It was great to hear about someone’s life experience in the industry and his honest opinions on working with commercial artists.

Peter Dowdall has worked with many artists, including Britney Spears. It was interesting to see how even the most professional artists are not conscious of such important recording practices. I’m talking about his example of an MP3 region being inserted into a Pro Tools session. That did surprise me quite a bit.

Dowdall’s career is pretty much my dream job. It was interesting to see how other students, notably John, didn’t share my enthusiasm for it as a career goal. Dowdall’s job did sound stressful at times, but I wouldn’t trade the opportunity to work with professionals for anything.

who wouldn't want Peter's job?

[1] Peter Dowdall, "Music Technology Forum: Semester 1 - Week 8 – Sound Engineering". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 8th May 2008

[2] wallpaper, Britney Spears. www.skins.be/britney-spears (Accessed 9/5/8)

CC3 Week 7 - Synthesiser Definitions II

//PART ONE - CODE BORROWED FROM JOHN DELANY

(
SynthDef("IndustrialAmbience", {
    arg freq = 100, rate = 0, contFreq = 100, i;
    var env, osc, hit, sin, dur = 10, sinDec, envStruct, envGen;

    // decaying impulses (hit and sin are built but not sent to the output)
    hit = Decay2.ar(Impulse.ar(rate, 0, 0.3), 0.03, 0.3);
    sinDec = Decay2.ar(Impulse.ar(rate, 0, 0.3), 0.01, 0.3);
    sin = SinOsc.ar(freq: contFreq * (i + 2), mul: sinDec);

    // bank of 10 resonators at 50, 50 + contFreq, 50 + 2 * contFreq, ...
    // with amplitudes falling from 0.8, excited by quiet stereo clip noise
    osc = Klank.ar(`[Array.series(10, 50, contFreq), Array.series(10, 0.8, -0.1)],
        ClipNoise.ar([0.005, 0.005]));

    // Envelope Structure: 4 seconds in total
    // (no .plot here: plotting inside a SynthDef opens a window on every build)
    envStruct = Env(
        levels: [0.1, 1, 0],
        times: [1.0, 3.0],
        curve: 'welch'
    );

    // Envelope Instance: doneAction 2 frees the synth when the envelope ends
    envGen = EnvGen.kr(
        envelope: envStruct,
        gate: 1.0,
        doneAction: 2
    );

    Out.ar(0, osc * envGen); // Outputs to buses 0 and 1
}).send(s);
)

a = Synth("IndustrialAmbience", [\contFreq, 10000, \rate, 9]);

//PART TWO - MY SYNTH DEF

// Here is the sample for part two.
b = Buffer.read(s, "sounds/Producers @ The Electric Light Hotel.wav");

(
// b.bufnum is fixed into the SynthDef when it is built, so read the buffer first
SynthDef("BufferPlay", {
    arg speed = 1, amp = 1, pan = 0, mFreq, mAmp;
    var sig, pan2, envStruct, envGen, mod;

    // loop the mono sample; BufRateScale compensates for the file's sample rate
    sig = PlayBuf.ar(1, b.bufnum, BufRateScale.kr(b.bufnum) * speed, loop: 1);
    pan2 = Pan2.ar(sig, pan);

    // sine wave multiplied with the signal (ring modulation)
    mod = SinOsc.ar(freq: mFreq, mul: mAmp);

    // Envelope Structure: 16 seconds in total
    // (no .plot here: plotting inside a SynthDef opens a window on every build)
    envStruct = Env(
        levels: [0, 1, 0.5, 0.3, 0],
        times: [2.0, 1.0, 3.0, 10.0],
        curve: 'lin'
    );

    // Envelope Instance: doneAction 2 frees the synth when the envelope ends
    envGen = EnvGen.kr(
        envelope: envStruct,
        gate: 1.0,
        doneAction: 2
    );

    Out.ar(bus: 0, channelsArray: amp * pan2 * mod * envGen);
}).send(s);
)

// left, right and centre at 1x, 2x and 4x speed
a = Synth("BufferPlay", [\speed, 1, \pan, -1, \mFreq, 1, \mAmp, 1]);
a = Synth("BufferPlay", [\speed, 2, \pan, 1, \mFreq, 1, \mAmp, 1]);
a = Synth("BufferPlay", [\speed, 4, \pan, 0, \mFreq, 3, \mAmp, 1]);
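BufRateScale.kr returns the buffer's sample rate divided by the server's, so multiplying it by speed keeps speed 1 at the sample's original pitch whatever rate the server runs at. Playing faster also raises the pitch by 12 * log2(speed) semitones, so the three test synths sit at the original pitch, one octave up and two octaves up. The Python below sketches both calculations; the 44.1 kHz file and 48 kHz server rates are assumed example values, not taken from the patch.

```python
import math


def playbuf_rate(speed: float, buf_sr: float, server_sr: float) -> float:
    """Rate given to PlayBuf: BufRateScale (buf_sr / server_sr) multiplied by speed."""
    return (buf_sr / server_sr) * speed


def pitch_shift_semitones(speed: float) -> float:
    """Pitch change from playing at `speed` (the sign only sets direction; speed != 0)."""
    return 12 * math.log2(abs(speed))


# Assumed example: a 44.1 kHz recording played on a 48 kHz server
for speed in (1, 2, 4):
    rate = playbuf_rate(speed, 44100, 48000)
    print(f"speed {speed}: PlayBuf rate {rate:.4f}, {pitch_shift_semitones(speed):+.0f} semitones")
```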

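// PART 3 - MIDI control

(
MIDIClient.init;
MIDIClient.sources; // list the available MIDI sources
// connect the first MIDI source to input port 0
MIDIIn.connectByUID(inport: 0, uid: MIDIClient.sources.at(0).uid);
)

(
// each note-on starts a new BufferPlay synth whose speed comes from the note number
MIDIIn.noteOn = {
    arg src, chan, num, vel;
    [chan, num, vel].postln;
    // map note 0..127 to speed -11..10 (negative speeds play backwards)
    a = Synth("BufferPlay", [\speed, num.linlin(0, 127, -11, 10).postln, \pan, -1, \mFreq, 1, \mAmp, 1]);
};

MIDIIn.noteOff = {};
)

(
// any controller changes the modulator frequency of the most recent synth
MIDIIn.control = {
    arg src, chan, num, val;
    [src, chan, num, val].postln;
    a.set(\mFreq, val * 1.5); // 0..127 becomes 0..190.5 Hz
};
)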
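In the note-on function, num.linlin(0, 127, -11, 10) maps the MIDI note number linearly onto a playback speed. A Python sketch of that mapping, assuming linlin's default behaviour of clipping inputs that fall outside the source range (the Python function is a stand-in, not SuperCollider's code):

```python
def linlin(x, in_min, in_max, out_min, out_max):
    """Linear range mapping, clipped at both ends (modelled on SC's default linlin)."""
    if x <= in_min:
        return out_min
    if x >= in_max:
        return out_max
    return (x - in_min) / (in_max - in_min) * (out_max - out_min) + out_min


# Playback speed for a few MIDI notes
for note in (0, 36, 60, 66, 67, 127):
    print(note, round(linlin(note, 0, 127, -11, 10), 3))
```

With this range the speed crosses zero between notes 66 and 67, so lower notes play the sample backwards and notes near the crossover barely move.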

// PART FOUR - Sound example of PART TWO

[1] Christian Haines. "Creative Computing: Semester 1 - Week 7 - Synthesiser Definitions II". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 17th April 2008

Week 7 Forum - Tristram Cary [2]

[2]

Before today I didn't know much about Tristram Cary. I feel quite lucky that one of the most successful electronic musicians of all time played such a big part at this university. Stephen's presentation on his life was very interesting, and I appreciate the work Cary did.

The VCS3, which Cary helped design, was a great invention, and even after so many years it still has a distinctive analogue sound. I could see myself using that synth in the music I make now. I also found Stephen's story of how Cary used post-World War II devices to build his instruments very interesting.

Where are the innovative musicians of today? I guess the next step is to build an instrument out of nuclear weapons or instruments of mass destruction . . .

[1] Stephen Whittington, "Music Technology Forum: Semester 1 - Week 7 – Tristram Cary". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 1st May 2008

[2] Electronic Music Unit, University of Adelaide. www.emu.adelaide.edu.au (Accessed 2/5/8)