Week 12 Forum - Scratch II

We were going to have some student presentations today, but once again neither student showed up. That's a bit of a worry, really... two weeks in a row?

Anyway, Stephen came to the rescue and played us more of that awesome Scratch DVD. After watching the scratching lessons by Qbert, I decided to put them into practice. Well, to be honest, I already knew a little about scratching, but I'm not a scratch DJ. I am a mix DJ. I have been a DJ for around 5 years and have played in heaps of clubs around Adelaide. I've used everything from vinyl to CDs to MP3s. I still prefer old-school vinyl.

Mixing and scratching are not easy techniques. I'm glad that we touched on this topic in forum so people can get the idea out of their heads that DJs "just play music". It's so much more than that. Aside from the skills of mixing, there are also the skills a DJ needs to work the crowd. You need to play certain songs at certain times so the crowd will buy drinks. For example, if you play too many good songs, people will stay on the dance floor and not buy drinks. You need to work the crowd and make them do what you want them to do. I shouldn't give away too many secrets!

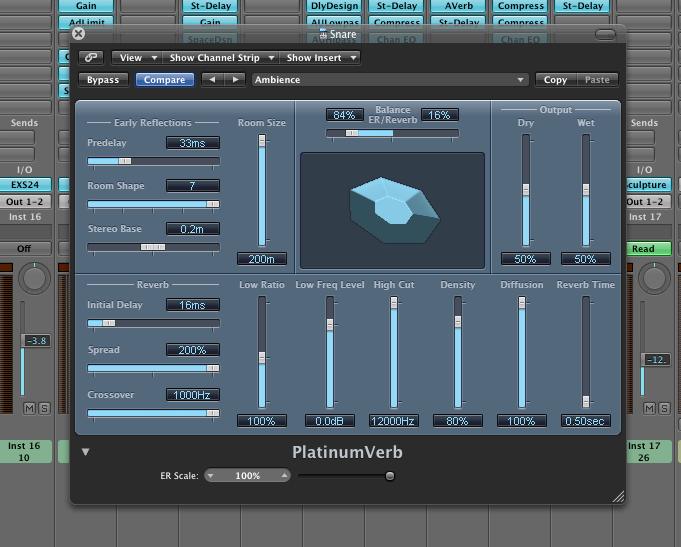

A common skill for a mix DJ is to play two songs together to create a new song. I have done this in my demonstration. A scratch DJ needs the skill of dropping in vocal stabs and samples to create new sounds. There is a lot of skill involved in DJing, and I think the many talentless big heads out in Adelaide have given the rest of us a bad name.

I have prepared a little movie of my mixing. It's a bit long, but you can just fast-forward if you're bored. Enjoy!

DJ Reverie in the mix!

My record collection is around 500, so I still have a bit to go before I can compare to DJ Shadow...

A typical Saturday night...

[1] Stephen Whittington, "Music Technology Forum: Semester 1 - Week 12 – Scratch". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 5 June 2008.