Monday, November 10, 2008

CC3 Major Project - Record-Madness!

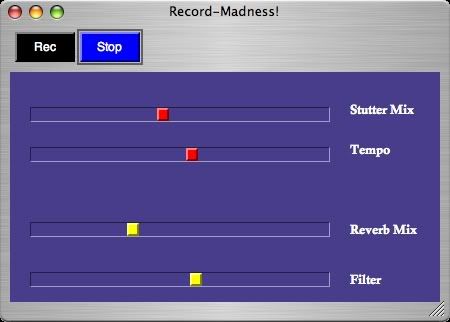

My project is a performance patch in which the user records an input into the patch. The patch then plays the sample back and processes it through SuperCollider. SuperCollider applies these processes to the sample:

1) BBCut

2) Tempo

3) Filter

4) Reverb

During playback the sample is cut up, which creates stutter effects. The sample playback can also be sped up or slowed down. There are also slider controls for a reverb effect and a filter effect; the filter is a band-pass filter. Above is a screenshot of the patch, and the files can be downloaded below.
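The speed-up and slow-down control changes the playback rate. The Python sketch below illustrates this; it is not the patch's SuperCollider code. It models variable-speed playback by stepping through the sample at a fractional rate and linearly interpolating between neighbouring samples. The function name `resample` is hypothetical, and the patch's buffer player may interpolate differently.

```python
def resample(samples, rate):
    """Play `samples` back at `rate` times normal speed (2.0 = twice as fast,
    0.5 = half speed), linearly interpolating between neighbouring samples."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    out = []
    pos = 0.0
    last = len(samples) - 1
    while pos <= last:
        i = int(pos)              # integer read position
        frac = pos - i            # fractional part used for interpolation
        nxt = samples[min(i + 1, last)]
        out.append(samples[i] * (1.0 - frac) + nxt * frac)
        pos += rate               # advance by the playback rate
    return out
```

With this simple approach, pitch changes along with speed. You hear the same trade-off whenever a sample is played back faster or slower.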

CODE

DOCUMENTATION

AUDIO [4.6MB] (low quality)

AUDIO [12.8MB] (high quality)

Sunday, November 09, 2008

Week 12 Forum - Final Form....ever

I didn't attend this forum because I had to finish a presentation for another subject. But I know what it was about, and it took the typical form: babbling and blather and blah blah blah blah blah... etc.

From speaking to other students, I know part of the forum touched on what students thought of the degree. If this degree has taught me anything, it is this:

If you want to study something that will give you a piece of paper that people think highly of but that is completely useless in the actual workforce, go to university.

If you want to study something that will give you a piece of paper that people don't regard highly but that will actually teach you useful skills relevant to the workforce, go to a vocational training institution.

Unless you want to be a doctor, engineer, lawyer or businessman, university is pretty much useless. It just tries to make you an intellectual.

[1] Stephen Whittington, "Music Technology Forum: Semester 2 - Week 12 – Babble". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 6th November 2008

Week 11 Forum - Electroacoustic Music

Today we watched some movies with music written by famous composers. I was particularly interested when Stephen mentioned that John Chowning made millions from something. I should have paid more attention to what he was saying. My ears pricked up when I heard "musician" and "millionaire"; you never hear those two words in the same sentence around here.

[1] Stephen Whittington, "Music Technology Forum: Semester 2 - Week 11 – Electroacoustic Music". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 23rd October 2008

Wednesday, November 05, 2008

Week 10 Forum - Honors Presentation

Today we had two presentations, from Poppi Doser and Martin Victory.

Poppi presented a movie she made with an animation student she has been working with for a few years. Poppi's work always seems to be of very high quality, and I think she interprets media well. The music suited the visuals very well.

Martin presented a complicated SuperCollider patch that turned network traffic into music. This is definitely an original idea, but I don't see it being commercially viable unless the patch can differentiate between different kinds of data. It would be really cool if the patch could generate particular music based on the material being searched for. I don't think this is possible, but it's worth a shot. I think Martin's original idea is still pretty impressive, and I'm curious to see how he explores this topic further.

[1] Poppi Doser & Martin Victory, "Music Technology Forum: Semester 2 - Week 10 – Honors Presentation". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 16th October 2008

Sunday, November 02, 2008

AA3 Week 10 - Project So Far

This is my progress so far. We were meant to have a rough cut by Week 10, but clearly I don't have one yet. I have combined the sounds I have so far with some music, but there is still some work left to do.

[1] Luke Harrald. "Audio Arts: Semester 2 - Week 10 - Rough Cut". Lecture presented at EMU, University of Adelaide, South Australia, 14th October 2008

Week 9 Forum - 3rd Year Presentations

[1]

Today we had presentations by the third-year students. I was impressed by how many of them decided to use surround sound in their works. I haven't used surround in my own work, but not because it doesn't interest me; I just haven't found a use for it yet. I do want to learn how to mix in surround properly for future work. I thought Ben's piano piece was nice, and Jake's piece sounded great with the surround sound pulsating through the room. Dave's piece was also quite good.

[1] 100Cute, Technology, Celebrity & Fashion Blog. http://www.100cute.com/design-your-own-surround-sound-spherical-speakers-for-the-real-bang.html (Accessed 10/10/8)

[2] Stephen Whittington & 3rd Year Students, "Music Technology Forum: Semester 2 - Week 9 – Student Presentations". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 9th October 2008

CC3 Week 9 - Spatialisation

AUDIO [1.7MB]

(

var sbs;

~gFreqMenu = 0;

~gFreq = 80;

~gAmp = 0.05;

~gDur = 1.0;

~gWait = 0.01;

~gPanX = 1;

~gPanY = 1;

SynthDef("FSinOsc", {

// Args

arg gFreq = 440, gAmp = 1.0, gDur = 3.0, xpan= 1, ypan=1;

// Vars

var osc, env, envGen, out;

// Unit Generators

osc = FSinOsc.ar(freq: gFreq);

env = Env.sine(

dur: gDur,

level: 1

);

envGen = EnvGen.ar(

envelope: env,

gate: 1,

levelScale: gAmp,

doneAction: 2

);

out = envGen * osc;

// Output

Out.ar(

bus: 0,

channelsArray: Pan4.ar(out,xpan, ypan)

)

}).send(s);

SynthDef("SinOsc", {

// Args

arg gFreq = 440, gAmp = 1.0, gDur = 3.0, xpan= 1, ypan=1;

// Vars

var osc, env, envGen, out;

// Unit Generators

osc = SinOsc.ar(freq: gFreq);

env = Env.sine(

dur: gDur,

level: 1

);

envGen = EnvGen.ar(

envelope: env,

gate: 1,

levelScale: gAmp,

doneAction: 2

);

out = envGen * osc;

// Output

Out.ar(

bus: 0,

channelsArray: Pan4.ar(out,xpan, ypan)

)

}).send(s);

SynthDef("LFTri", {

// Args

arg gFreq = 440, gAmp = 1.0, gDur = 3.0, xpan= 1, ypan=1;

// Vars

var osc, env, envGen, out;

// Unit Generators

osc = LFTri.ar(freq: gFreq);

env = Env.sine(

dur: gDur,

level: 1

);

envGen = EnvGen.ar(

envelope: env,

gate: 1,

levelScale: gAmp,

doneAction: 2

);

out = envGen * osc;

// Output

Out.ar(

bus: 0,

channelsArray: Pan4.ar(out,xpan, ypan) )

}).send(s);

SynthDef("Pulse", {

// Args

arg gFreq = 440, gAmp = 1.0, gDur = 3.0, xpan= 1, ypan=1;

// Vars

var osc, env, envGen, out;

// Unit Generators

osc = Pulse.ar(freq: gFreq);

env = Env.sine(

dur: gDur,

level: 1

);

envGen = EnvGen.ar(

envelope: env,

gate: 1,

levelScale: gAmp,

doneAction: 2

);

out = envGen * osc;

// Output

Out.ar(

bus: 0,

channelsArray: Pan4.ar(out,xpan, ypan) )

}).send(s);

SynthDef("Saw", {

// Args

arg gFreq = 440, gAmp = 1.0, gDur = 3.0, xpan= 1, ypan=1;

// Vars

var osc, env, envGen, out;

// Unit Generators

osc = Saw.ar(freq: gFreq);

env = Env.sine(

dur: gDur,

level: 1

);

envGen = EnvGen.ar(

envelope: env,

gate: 1,

levelScale: gAmp,

doneAction: 2

);

out = envGen * osc;

// Output

Out.ar(

bus: 0,

channelsArray: Pan4.ar(out,xpan, ypan)

)

}).send(s);

//w.close;

// WHOLE WINDOW

w = SCWindow.new.front;

// Parameters

w.bounds = Rect(170,150,450,380); // Rectangular Window Bounds (Bottom Left Width Height)

w.name_("Grainulator"); // Window Name

w.alpha_(0.8); // Transparency

// w.boxColor_(Color.black);

// PANEL

e = SCCompositeView(w,Rect(10,50,430,310));

e.background = Color.new255(100,0,50);

//Gradient(Color.blue,Color.black);

// DROP DOWN MENU (WAVEFORM)

l = [

"Fast Sine Wave","Sine Wave","Triangle Wave","Square Wave","Saw Tooth Wave"];

sbs = SCPopUpMenu(w,Rect(10,10,150,20));

sbs.items = l;

sbs.background_(Color.new255(100,0,50));

sbs.stringColor_(Color.white);

sbs.action = { arg sbs;

~gFreqMenu = sbs.value.postln;

};

// 4 CHANNEL PANNER

v = SC2DSlider(w, Rect(300, 1, 45, 45))

.x_(0.5) // initial location of x

.y_(1) // initial location of y

.action_({|sl|

~gPanX = (sl.x*2)-1;

~gPanY = (sl.y*2)-1;

});

// TEXT (FREQ)

r = SCStaticText(e, Rect(350, 80, 100, 20));

r.stringColor_(Color.white);

r.string = "Frequency";

r.font_(Font("Arial", 12));

// SLIDER (FREQ)

r = SCSlider(e, Rect(30, 85, 300, 15))

//.lo_(0.001)

//.hi_(1)

//.range_(1)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.red))

.action_({ |slider|

//slider.lo=0;

// slider=0;

//r.value=""++(slider.hi*2000) ++"Hz";

r.value=""++(slider.value*2000) ++"Hz";

// ~gFreq = slider.hi*2000;

~gFreq = slider.value*2000;

r.stringColor_(Color.white);

// [\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (FREQ)

r = SCTextField(

parent: e,

bounds: Rect(350, 110, 50, 15) // Left Top Width Height

).boxColor_(Color.new255(100,0,50));

// TEXT (AMP)

t = SCStaticText(e, Rect(350, 150, 100, 20));

t.stringColor_(Color.white);

t.string = "Amplitude";

t.font_(Font("Arial", 12));

// SLIDER (AMP)

t = SCSlider(e, Rect(30, 85+70, 300, 15))

// .lo_(0.0001)

// .hi_(1)

//.range_(1)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.red))

.action_({ |slider|

// slider=0;

t.value=""++(slider.value*1) ++"";

~gAmp = slider.value*0.1;

t.stringColor_(Color.white);

// [\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (AMP)

t = SCTextField(

parent: e,

bounds: Rect(350, 180, 50, 15) // Left Top Width Height

).boxColor_(Color.new255(100,0,50));

// TEXT (DUR)

u = SCStaticText(e, Rect(350, 220, 150, 20));

u.stringColor_(Color.white);

u.string = "Duration";

u.font_(Font("Arial", 12));

// SLIDER (DUR)

u = SCSlider(e, Rect(30, 225, 300, 15))

// .lo_(0.001)

// .hi_(1)

// .range_(1)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.blue))

.action_({ |slider|

// slider=0;

u.value=""++(slider.value*1) ++"s";

~gDur = slider.value*1;

u.stringColor_(Color.white);

//[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (DUR)

u = SCTextField(

parent: e,

bounds: Rect(350, 250, 50, 15) // Left Top Width Height

).boxColor_(Color.new255(100,0,50));

// TEXT (WAIT)

p = SCStaticText(e, Rect(350, 290, 150, 20));

p.stringColor_(Color.white);

p.string = "Iteration Time";

p.font_(Font("Arial", 12));

// SLIDER (WAIT)

p = SCSlider(e, Rect(30, 295, 300, 15))

// .lo_(0.001)

// .hi_(1)

// .range_(1)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.red))

.action_({ |slider|

// slider.lo=0;

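p.value=""++(0.01/slider.value.max(0.01)) ++"s";

~gWait = 0.01/slider.value.max(0.01); // clamp the slider value so a setting of 0 cannot divide by zero and stall the grain loop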

p.stringColor_(Color.white);

// [\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (WAIT)

p = SCTextField(

parent: e,

bounds: Rect(350, 320, 50, 15) // Left Top Width Height

).boxColor_(Color.new255(100,0,50));

Routine({ s.sync;

inf.do({

~gFreqMenu.switch(0,{Synth("FSinOsc", [\gFreq, ~gFreq, \gAmp, ~gAmp, \gDur, ~gDur, \xpan, ~gPanX, \ypan, ~gPanY])},

1,{Synth("SinOsc", [\gFreq, ~gFreq, \gAmp, ~gAmp, \gDur, ~gDur, \xpan, ~gPanX, \ypan, ~gPanY])},

2,{Synth("LFTri", [\gFreq, ~gFreq, \gAmp, ~gAmp, \gDur, ~gDur, \xpan, ~gPanX, \ypan, ~gPanY])},

3,{Synth("Pulse", [\gFreq, ~gFreq, \gAmp, ~gAmp, \gDur, ~gDur, \xpan, ~gPanX, \ypan, ~gPanY])},

4,{Synth("Saw", [\gFreq, ~gFreq, \gAmp, ~gAmp, \gDur, ~gDur, \xpan, ~gPanX, \ypan, ~gPanY])}

);

// Create Grain

// Synth("Pulse", [\gFreq, {rrand(80, 300).postln}, \gAmp, 0.05, \gDur, 1.0]);

// [1000, 8000] [200, 400]

// Grain Delta - 0.1, 0.01

~gWait.wait;

})

}).play;

)
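Pan4 is SuperCollider's four-channel equal-power panner. According to its documentation, the outputs are ordered left-front, right-front, left-back, right-back, with x running from left (-1) to right (+1) and y from back (-1) to front (+1). The Python sketch below models that kind of equal-power law together with the patch's `(sl.x*2)-1` slider mapping. The function names are hypothetical. The gain curve is an approximation for illustration, not a transcription of Pan4's source.

```python
import math

def slider_to_pan(v):
    """Map a 2D-slider coordinate in 0..1 to a pan position in -1..1,
    as the patch does with (sl.x*2)-1."""
    return v * 2 - 1

def pan4_gains(xpos, ypos):
    """Equal-power four-channel gains for a source at (xpos, ypos), each in -1..1.
    x runs left (-1) to right (+1); y runs back (-1) to front (+1).
    Returns (left_front, right_front, left_back, right_back)."""
    xpos = max(-1.0, min(1.0, xpos))
    ypos = max(-1.0, min(1.0, ypos))
    # Map -1..1 onto a quarter circle (0..pi/2) so that cos^2 + sin^2 = 1
    ax = (xpos + 1) * math.pi / 4
    ay = (ypos + 1) * math.pi / 4
    left, right = math.cos(ax), math.sin(ax)
    back, front = math.cos(ay), math.sin(ay)
    return (left * front, right * front, left * back, right * back)
```

The squares of the four gains always sum to 1, so a grain keeps roughly the same loudness wherever it is panned. Under this model, the patch's starting values `~gPanX = 1` and `~gPanY = 1` put every grain in the right-front speaker until the 2D slider is moved.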

[1] Christian Haines. "Creative Computing: Semester 2 - Week 9 - Spatialisation". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 23rd October 2008

Saturday, November 01, 2008

Week 8 Forum - My Favourite Things III

Today we watched a David Lynch film called Eraserhead. I found this movie to be weird and boring. It is not something I'd bother watching again. Maybe I just didn't get it, but either way I found it uninteresting.

[1] David Harris, "Music Technology Forum: Semester 2 - Week 8 – My Favorite Things III". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 18th September 2008

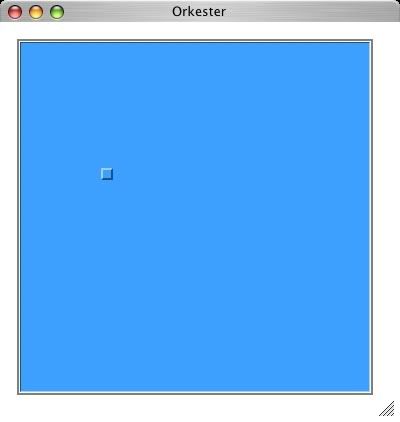

CC3 Week 8 - FFT II

I extended my patch from the previous FFT week. I have used the same panner, but the X-Y coordinates now control different parameters: the X coordinate controls the saw wave SynthDef and the Y coordinate controls the string sample. Moving the panner in different directions changes which sound is frozen. If the panner is in the bottom left corner, both sounds play as normal. If it is in the top right corner, both sounds freeze. If it is in the top left corner, the saw wave continues to play and the string sound freezes. If it is in the bottom right corner, the string sound continues to play and the saw wave freezes.
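In the code, `t` (the saw SynthDef, "help-freeze") receives `p.x` and `u` (the string SynthDef, "help-magFreeze2") receives `p.y`. Each sound freezes once its value passes 0.5. The Python sketch below models that corner logic plus a heavily simplified spectral freeze. It relies on several assumptions. The real PV_Freeze holds the magnitudes while still advancing the phases, whereas `freeze_magnitudes` (a hypothetical name) only repeats the last unfrozen magnitude frame.

```python
def freeze_states(x, y, threshold=0.5):
    """Return (saw_frozen, strings_frozen) for 2D-slider coordinates in 0..1,
    mirroring the patch's `x > 0.5` and `y > 0.5` freeze tests."""
    return (x > threshold, y > threshold)

def freeze_magnitudes(frames, freeze_flags):
    """Simplified spectral freeze: while a frame's flag is True, output the
    magnitudes of the last unfrozen frame instead of the current one.
    `frames` is a list of magnitude lists, one per FFT frame."""
    held = None
    out = []
    for mags, frozen in zip(frames, freeze_flags):
        if not frozen or held is None:
            held = list(mags)      # capture the current spectrum
        out.append(list(held))     # emit the held (possibly frozen) spectrum
    return out
```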

I have an audio example of me messing around with the panner. Click here to have a listen. [1.7MB]

s.boot;

(

b = Buffer.alloc(s,1024); // FFT buffer for the saw synth

d = Buffer.alloc(s,1024); // separate FFT buffer for the string synth: FFT chains should not share a buffer

c = Buffer.read(s,"sounds/100- strings.wav");

// string sample [3.6MB]

SynthDef("help-freeze", { arg out=0, bufnum=0, x=0;

var in, chain;

in = Saw.ar(LFNoise1.kr(5.2,250,400), 0.25);

chain = FFT(bufnum, in);

chain = PV_Freeze(chain, x > 0.5);

Out.ar(out, 0.5 * IFFT(chain).dup);

}).send(s);

SynthDef("help-magFreeze2", { arg out=0, bufnum=0, soundBufnum=2, y=0;

var in, chain;

in = PlayBuf.ar(1, soundBufnum, BufRateScale.kr(soundBufnum), loop: 1);

chain = FFT(bufnum, in);

chain = PV_Freeze(chain, y > 0.5 );

Out.ar(out, 0.5 * IFFT(chain).dup);

}).send(s);

)

(

t = Synth("help-freeze",[\out, 0, \bufnum, b.bufnum]);

u = Synth("help-magFreeze2",[\out, 0, \bufnum, d.bufnum, \soundBufnum, c.bufnum]);

w = SCWindow("Orkester", Rect(100, 500, 400, 400))

.front;

w.view.background_(Color.white);

w.alpha = 0.8;

p = SC2DSlider(w, Rect(20, 20,350, 350))

.x_(0.5) // initial location of x

.y_(1) // initial location of y

.action_({p.background = (Color.new(p.x, p.y, 200, 1));

t.set(\x, p.x);

u.set(\y, p.y);

});

//w.front;

);

[1] Christian Haines. "Creative Computing: Semester 2 - Week 8 - FFT II". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 18th September 2008

Tuesday, September 09, 2008

Week 7 Forum - 2nd / 3rd Year Presentations

This week's forum was on presentations. I presented my SuperCollider piece from last semester. When I executed the code, I had the same problem I had while doing the assignment: the second part of the piece didn't play. It's a strange problem, as it depends on the computer. It didn't matter much, because I just played the audio recording. I don't know what people thought of my piece. Personally, I think it's a collection of meaningless sounds that adds up to nothing. Surprisingly, I got a good mark for it!

I was going to play my latest song, which has been signed to a record label in Melbourne. I decided not to, seeing as the majority of EMU don't appreciate real music and would rather listen to sine tones.

[1] Stephen Whittington, "Music Technology Forum: Semester 2 - Week 7 – 2nd / 3rd Year Presentations". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 11th September 2008

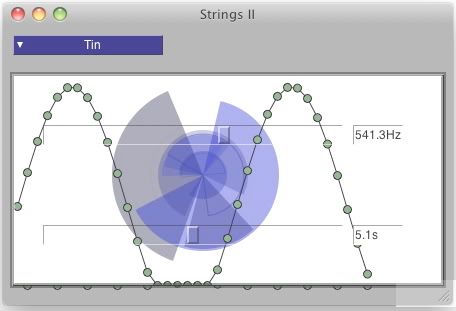

CC3 Week 7 - GUI III

I have incorporated the IXI GUI code for a fancy spinning circle with an oscillating waveform. The GUI has no function in the patch; I have just used it for visual feedback. The animation runs for as long as the note lasts. I tried to change some of the parameters in the IXI code to alter its behaviour, but for some reason SuperCollider kept crashing. So I have used the default code and just changed the dimensions and colour.

In case you forgot, you trigger the notes by clicking on the background and control the frequency and duration of the notes using the sliders. The drop-down menu changes the timbre of the notes.
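The sliders map their 0 to 1 range onto musical values. `(a.value*70)+30` gives a MIDI note from 30 to 100, which `midicps` converts to Hz, and `(b.value*10)+0.1` gives a duration from 0.1 to 10.1 seconds. Each SynthDef then sets its comb delay to `midiPitch.midicps.reciprocal`, which is one period of the note. The Python sketch below reproduces that arithmetic. The function names are hypothetical, and `midicps` is re-implemented with the standard equal-temperament formula (A4 = MIDI 69 = 440 Hz).

```python
def midicps(note):
    """MIDI note number to Hz (A4 = MIDI 69 = 440 Hz), like SuperCollider's midicps."""
    return 440.0 * 2 ** ((note - 69) / 12)

def pitch_from_slider(v):
    """Patch mapping: slider 0..1 -> MIDI note 30..100."""
    return v * 70 + 30

def duration_from_slider(v):
    """Patch mapping: slider 0..1 -> 0.1..10.1 seconds."""
    return v * 10 + 0.1

def delay_time(note):
    """Comb delay in seconds: one period of the note (midicps.reciprocal)."""
    return 1.0 / midicps(note)
```

So the pitch slider spans roughly 46 Hz (MIDI 30) to 2637 Hz (MIDI 100). At the slider's midpoint (MIDI 65, about 349.2 Hz), the comb delay is about 2.9 ms.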

//PINK NOISE

(

// Delayed Noise Generator - Envelope > Noise Generator > Delay

SynthDef("plucked-pink", {

// Arguments

arg midiPitch = 80, duration = 10;

// Variables

var burstEnv, noise, delay, att = 0, dec = 0.001, out,

delayTime = midiPitch.midicps.reciprocal, trigRate = 1;

// Envelope

burstEnv = EnvGen.kr(

envelope: Env.perc(att, dec)

);

// Noise Generator

noise = PinkNoise.ar(

mul: burstEnv

);

// Delay

delay = CombL.ar(

in: noise,

maxdelaytime: delayTime,

delaytime: delayTime,

decaytime: duration,

add: noise

);

// Output

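// Free the synth once the plucked string has died away, so synths don't pile up with every click

DetectSilence.ar(delay, doneAction: 2);

Out.ar(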

bus: 0,

channelsArray: delay.dup

);

}).send(s);

//WHITE NOISE

// Delayed Noise Generator - Envelope > Noise Generator > Delay

SynthDef("plucked-white", {

// Arguments

arg midiPitch = 80, duration = 10;

// Variables

var burstEnv, noise, delay, att = 0, dec = 0.001, out,

delayTime = midiPitch.midicps.reciprocal, trigRate = 1;

// Envelope

burstEnv = EnvGen.kr(

envelope: Env.perc(att, dec)

);

// Noise Generator

noise = WhiteNoise.ar(

mul: burstEnv

);

// Delay

delay = CombL.ar(

in: noise,

maxdelaytime: delayTime,

delaytime: delayTime,

decaytime: duration,

add: noise

);

// Output

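// Free the synth once the plucked string has died away, so synths don't pile up with every click

DetectSilence.ar(delay, doneAction: 2);

Out.ar(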

bus: 0,

channelsArray: delay.dup

);

}).send(s);

//BROWN NOISE

// Delayed Noise Generator - Envelope > Noise Generator > Delay

SynthDef("plucked-brown", {

// Arguments

arg midiPitch = 80, duration = 10;

// Variables

var burstEnv, noise, delay, att = 0, dec = 0.001, out,

delayTime = midiPitch.midicps.reciprocal, trigRate = 1;

// Envelope

burstEnv = EnvGen.kr(

envelope: Env.perc(att, dec)

);

// Noise Generator

noise = BrownNoise.ar(

mul: burstEnv

);

// Delay

delay = CombL.ar(

in: noise,

maxdelaytime: delayTime,

delaytime: delayTime,

decaytime: duration,

add: noise

);

// Output

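// Free the synth once the plucked string has died away, so synths don't pile up with every click

DetectSilence.ar(delay, doneAction: 2);

Out.ar(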

bus: 0,

channelsArray: delay.dup

);

}).send(s);

//CLIP NOISE

// Delayed Noise Generator - Envelope > Noise Generator > Delay

SynthDef("plucked-clip", {

// Arguments

arg midiPitch = 80, duration = 10;

// Variables

var burstEnv, noise, delay, att = 0, dec = 0.001, out,

delayTime = midiPitch.midicps.reciprocal, trigRate = 1;

// Envelope

burstEnv = EnvGen.kr(

envelope: Env.perc(att, dec)

);

// Noise Generator

noise = ClipNoise.ar(

mul: burstEnv

);

// Delay

delay = CombL.ar(

in: noise,

maxdelaytime: delayTime,

delaytime: delayTime,

decaytime: duration,

add: noise

);

// Output

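// Free the synth once the plucked string has died away, so synths don't pile up with every click

DetectSilence.ar(delay, doneAction: 2);

Out.ar(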

bus: 0,

channelsArray: delay.dup

);

}).send(s);

//GREY NOISE

// Delayed Noise Generator - Envelope > Noise Generator > Delay

SynthDef("plucked-grey", {

// Arguments

arg midiPitch = 80, duration = 10;

// Variables

var burstEnv, noise, delay, att = 0, dec = 0.001, out,

delayTime = midiPitch.midicps.reciprocal, trigRate = 1;

// Envelope

burstEnv = EnvGen.kr(

envelope: Env.perc(att, dec)

);

// Noise Generator

noise = GrayNoise.ar(

mul: burstEnv

);

// Delay

delay = CombL.ar(

in: noise,

maxdelaytime: delayTime,

delaytime: delayTime,

decaytime: duration,

add: noise

);

// Output

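// Free the synth once the plucked string has died away, so synths don't pile up with every click

DetectSilence.ar(delay, doneAction: 2);

Out.ar(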

bus: 0,

channelsArray: delay.dup

);

}).send(s);

// w.close;

// WHOLE WINDOW

w = SCWindow.new.front;

w.bounds = Rect(170,150,450,280);

w.name_("Strings II");

w.alpha_(0.8);

p = ParaSpace.new(w, bounds: Rect(10,50,430,210));

p.setShape_("circle");

36.do({arg i;

p.createNode(3+(i*10), 130)

});

35.do({arg i;

p.createConnection(i, i+1);

});

p.setBackgrDrawFunc_({

10.do{

Color.blue(rrand(0.0, 1), rrand(0.0, 0.5)).set;

Pen.addWedge(200@150, rrand(10, 100), 2pi.rand, 2pi.rand);

Pen.perform([\stroke, \fill].choose);

}

});

t = Task({

inf.do({arg i;

36.do({arg j;

(3+((j%36)*10));

p.setNodeLoc_(j, 3+((j%36)*10), ((i*j/100).sin*120)+130);

});

0.01.wait;

})

}, AppClock);

//// PANEL

//e = SCCompositeView(w,Rect(10,50,430,210));

//e.background = Color.new255(100,0,50);

////Gradient(Color.blue,Color.black);

// TRIGGER BUTTON

f = SCButton(w, Rect(10,50,430,210))

.states_([

[" ", Color.white, Color.new255(10,0,100,0.1)]

])

.font_(Font("Arial", 10))

.action_({r.reset;

r.play;

~noisetype.switch(0,{Synth("plucked-pink", [\midiPitch, ~pitch, \duration, ~dur])},

1,{Synth("plucked-white", [\midiPitch, ~pitch, \duration, ~dur])},

2,{Synth("plucked-brown", [\midiPitch, ~pitch, \duration, ~dur])},

3,{Synth("plucked-clip", [\midiPitch, ~pitch, \duration, ~dur])},

4,{Synth("plucked-grey", [\midiPitch, ~pitch, \duration, ~dur])});

});

// DROP DOWN MENU

l = [

"Twang","Harsh","Warm","Tin","Nylon"];

m = SCPopUpMenu(w,Rect(10,10,150,20));

m.items = l;

m.background_(Color.new255(10,0,100));

m.stringColor_(Color.white);

m.action = { arg m;

~noisetype = m.value.postln};

//c.value=""++(slider.hi) ++"Hz";

//PITCH

c = SCTextField(w, Rect(350, 100, 50, 20));

a = SCSlider(w, Rect(40, 100, 300, 20))

.focusColor_(Color.red(alpha:0.2))

.action_({

c.value_(""++((a.value*70)+30).midicps.round(0.1)++"Hz");

~pitch = ((a.value*70)+30)

});

//DURATION

d = SCTextField(w, Rect(350, 200, 50, 20));

b = SCSlider(w, Rect(40, 200, 300, 20))

.focusColor_(Color.red(alpha:0.2))

.action_({

d.value_(""++((b.value*10)+0.1).round(0.1)++"s");

~dur = ((b.value*10)+0.1)

});

r = Routine({

t.resume;

~dur.wait;

t.pause;});

m.valueAction = 0; // select the first timbre so ~noisetype is set before the first click

a.valueAction = 0.5;

b.valueAction = 0.5;)

[1] Christian Haines. "Creative Computing: Semester 2 - Week 7 - GUI III". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 11th September 2008

AA3 Week 7 - Randy Thom

I appreciated Randy Thom's approach to sound design. It is important to plan the sound and music together with the visual aspects of the film rather than leaving them until the end. In my experience of working with visual media (which isn't a lot), I have always been presented with the finished movie and asked to write music for it. I had no control over the planning and production of the work. Unfortunately, this has always been due to either the budget or the attitude of the producers.

I had a look at the movies that Randy Thom did the sound design for. I have only seen a small number of his many films:

Final Fantasy: The Spirits Within

What Lies Beneath

Snow Falling on Cedars

Jumanji

Miracle on 34th Street

Forrest Gump

[1]

All these films possess very good sound design. I particularly enjoyed What Lies Beneath. It was actually one of the films that got me interested in film sound in the first place.

[3]

[1] imdb, 'Randy Thom'. www.imdb.com/name/nm0858378/ (Accessed 11/9/8)

[2] Luke Harrald. "Audio Arts: Semester 2 - Week 7 - Randy Thom". Lecture presented at EMU, University of Adelaide, South Australia, 9th September 2008

[3] Skywalker Sound, 'Randy Thom'. www.skysound.com (Accessed 11/9/8)

Saturday, September 06, 2008

Week 6 Forum - Practical Composition Workshop IB

[2]

This week we finished our task from a couple of weeks ago: making sounds that represent particular emotions. My sounds are listed below for you to listen to. I have deliberately not shown what each sound represents so you can have a proper guess. The answers are here.

I think some are more obvious than others. It was hard to distinguish sounds that represent similar emotions (e.g. happiness and love).

Sound 1

Sound 2

Sound 3

Sound 4

Sound 5

Sound 6

Sound 7

Sound 8

Sound 9

[1] Stephen Whittington, "Music Technology Forum: Semester 2 - Week 6 – Practical Composition Workshop II". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 4th September 2008

[2] TechDoctor Company. "A Cure For Your Tech Headaches". www.techdoctorcompany.com/homeoffice (Accessed 9/9/8)

Friday, September 05, 2008

CC3 Week 6 - Physical Modelling I

I have created a Karplus-Strong string synthesis patch with some parameters on the GUI to control the sound. A drop-down menu selects the timbre of the sound; it actually changes the type of noise used for the excitation burst. A frequency slider sets the pitch of the string, and a duration slider sets how long the notes ring.

To trigger the notes, simply click anywhere in the window. A button covers the background, so you can just click and hear the notes.
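The patch follows the Karplus-Strong idea of exciting a tuned delay line with a short burst of noise. An envelope gates a noise source, and CombL feeds it back with a delay of one period and a decay time set by the duration slider, with the dry noise added on top. The Python sketch below models that chain under some simplifying assumptions. It uses an integer delay instead of CombL's interpolated one, a rectangular burst instead of `Env.perc`, and uniform random noise in place of the selectable noise colours. The function names are hypothetical. Classic Karplus-Strong also puts a low-pass (averaging) filter inside the loop; like the patch, this sketch leaves it out.

```python
import math
import random

def comb_feedback(delay_time, decay_time):
    """Feedback gain that makes a comb filter fall by 60 dB over decay_time
    seconds (the meaning of decaytime in SuperCollider's comb UGens)."""
    return math.exp(math.log(0.001) * delay_time / decay_time)

def plucked_string(freq, decay_time, sr=44100, length=1.0, burst=0.001, seed=0):
    """Noise burst -> feedback comb filter, plus the dry burst (like add: noise)."""
    rng = random.Random(seed)
    period = max(1, round(sr / freq))          # delay in samples, about one period
    fb = comb_feedback(period / sr, decay_time)
    n = int(sr * length)
    burst_len = max(1, int(sr * burst))
    excite = [rng.uniform(-1, 1) if i < burst_len else 0.0 for i in range(n)]
    buf = [0.0] * period                       # circular delay line
    out = []
    for i, x in enumerate(excite):
        delayed = buf[i % period]              # value written one period ago
        buf[i % period] = x + fb * delayed     # feed the input plus decayed echo back in
        out.append(x + delayed)                # dry noise plus comb output
    return out
```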

Click here for an audio example of my patch. [1.7MB]

//PINK NOISE

(

// Delayed Noise Generator - Envelope > Noise Generator > Delay

SynthDef("plucked-pink", {

// Arguments

arg midiPitch = 80, duration = 10;

// Variables

var burstEnv, noise, delay, att = 0, dec = 0.001, out,

delayTime = midiPitch.midicps.reciprocal, trigRate = 1;

// Envelope

burstEnv = EnvGen.kr(

envelope: Env.perc(att, dec)

);

// Noise Generator

noise = PinkNoise.ar(

mul: burstEnv

);

// Delay

delay = CombL.ar(

in: noise,

maxdelaytime: delayTime,

delaytime: delayTime,

decaytime: duration,

add: noise

);

// Output

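// Free the synth once the plucked string has died away, so synths don't pile up with every click

DetectSilence.ar(delay, doneAction: 2);

Out.ar(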

bus: 0,

channelsArray: delay.dup

);

}).send(s);

//WHITE NOISE

// Delayed Noise Generator - Envelope > Noise Generator > Delay

SynthDef("plucked-white", {

// Arguments

arg midiPitch = 80, duration = 10;

// Variables

var burstEnv, noise, delay, att = 0, dec = 0.001, out,

delayTime = midiPitch.midicps.reciprocal, trigRate = 1;

// Envelope

burstEnv = EnvGen.kr(

envelope: Env.perc(att, dec)

);

// Noise Generator

noise = WhiteNoise.ar(

mul: burstEnv

);

// Delay

delay = CombL.ar(

in: noise,

maxdelaytime: delayTime,

delaytime: delayTime,

decaytime: duration,

add: noise

);

// Output

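// Free the synth once the plucked string has died away, so synths don't pile up with every click

DetectSilence.ar(delay, doneAction: 2);

Out.ar(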

bus: 0,

channelsArray: delay.dup

);

}).send(s);

//BROWN NOISE

// Delayed Noise Generator - Envelope > Noise Generator > Delay

SynthDef("plucked-brown", {

// Arguments

arg midiPitch = 80, duration = 10;

// Variables

var burstEnv, noise, delay, att = 0, dec = 0.001, out,

delayTime = midiPitch.midicps.reciprocal, trigRate = 1;

// Envelope

burstEnv = EnvGen.kr(

envelope: Env.perc(att, dec)

);

// Noise Generator

noise = BrownNoise.ar(

mul: burstEnv

);

// Delay

delay = CombL.ar(

in: noise,

maxdelaytime: delayTime,

delaytime: delayTime,

decaytime: duration,

add: noise

);

// Output

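// Free the synth once the plucked string has died away, so synths don't pile up with every click

DetectSilence.ar(delay, doneAction: 2);

Out.ar(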

bus: 0,

channelsArray: delay.dup

);

}).send(s);

//CLIP NOISE

// Delayed Noise Generator - Envelope > Noise Generator > Delay

SynthDef("plucked-clip", {

// Arguments

arg midiPitch = 80, duration = 10;

// Variables

var burstEnv, noise, delay, att = 0, dec = 0.001, out,

delayTime = midiPitch.midicps.reciprocal, trigRate = 1;

// Envelope

burstEnv = EnvGen.kr(

envelope: Env.perc(att, dec)

);

// Noise Generator

noise = ClipNoise.ar(

mul: burstEnv

);

// Delay

delay = CombL.ar(

in: noise,

maxdelaytime: delayTime,

delaytime: delayTime,

decaytime: duration,

add: noise

);

// Output

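// Free the synth once the plucked string has died away, so synths don't pile up with every click

DetectSilence.ar(delay, doneAction: 2);

Out.ar(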

bus: 0,

channelsArray: delay.dup

);

}).send(s);

//GREY NOISE

// Delayed Noise Generator - Envelope > Noise Generator > Delay

SynthDef("plucked-grey", {

// Arguments

arg midiPitch = 80, duration = 10;

// Variables

var burstEnv, noise, delay, att = 0, dec = 0.001, out,

delayTime = midiPitch.midicps.reciprocal, trigRate = 1;

// Envelope

burstEnv = EnvGen.kr(

envelope: Env.perc(att, dec)

);

// Noise Generator

noise = GrayNoise.ar(

mul: burstEnv

);

// Delay

delay = CombL.ar(

in: noise,

maxdelaytime: delayTime,

delaytime: delayTime,

decaytime: duration,

add: noise

);

// Output

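// Free the synth once the plucked string has died away, so synths don't pile up with every click

DetectSilence.ar(delay, doneAction: 2);

Out.ar(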

bus: 0,

channelsArray: delay.dup

);

}).send(s);

w.close;

// WHOLE WINDOW

w = SCWindow.new.front;

w.bounds = Rect(170,150,450,280);

w.name_("Strings");

w.alpha_(0.8);

// PANEL

e = SCCompositeView(w,Rect(10,50,430,210));

e.background = Color.new255(100,0,50);

//Gradient(Color.blue,Color.black);

// TRIGGER BUTTON

f = SCButton(w, Rect(10,50,430,210))

.states_([

[" ", Color.white, Color.new255(10,0,100)]

])

.font_(Font("Arial", 10))

.action_({

~noisetype.switch(0,{Synth("plucked-pink", [\midiPitch, ~pitch, \duration, ~dur])},

1,{Synth("plucked-white", [\midiPitch, ~pitch, \duration, ~dur])},

2,{Synth("plucked-brown", [\midiPitch, ~pitch, \duration, ~dur])},

3,{Synth("plucked-clip", [\midiPitch, ~pitch, \duration, ~dur])},

4,{Synth("plucked-grey", [\midiPitch, ~pitch, \duration, ~dur])});

});

// DROP DOWN MENU

l = [

"Twang","Harsh","Warm","Tin","Nylon"];

m = SCPopUpMenu(w,Rect(10,10,150,20));

m.items = l;

m.background_(Color.new255(10,0,100));

m.stringColor_(Color.white);

m.action = { arg m;

~noisetype = m.value.postln};

//c.value=""++(slider.hi) ++"Hz";

//PITCH

c = SCTextField(w, Rect(350, 100, 50, 20));

a = SCSlider(w, Rect(40, 100, 300, 20))

.focusColor_(Color.red(alpha:0.2))

.action_({

c.value_(""++((a.value*70)+30).midicps.round(0.1)++"Hz");

~pitch = ((a.value*70)+30)

});

//DURATION

d = SCTextField(w, Rect(350, 200, 50, 20));

b = SCSlider(w, Rect(40, 200, 300, 20))

.focusColor_(Color.red(alpha:0.2))

.action_({

d.value_(""++((b.value*10)+0.1).round(0.1)++"s");

~dur = ((b.value*10)+0.1)

});

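// Initialise the menu and sliders so ~noisetype, ~pitch and ~dur are set before the first click

m.valueAction = 0;

a.valueAction = 0.5;

b.valueAction = 0.5;

)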

[1] Christian Haines. "Creative Computing: Semester 2 - Week 6 - Physical Modelling I". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 4th September 2008

Watch out for the Shaq Attack!

I thought today’s presentations went well. It was good to see where everyone else is. Unfortunately, I didn't have any sounds to show because I am still in the organisation stage of my project. I'm not going to record anything until I know every single sound I need for the film. Besides, it's not due for a long time, so I still have plenty of time.

I don't know the student's name, but the music played by the composition student was very good. I also liked the music from Ben and Jake.

Great sound design from John, Dave and Luke. It was interesting to see how everyone is taking a slightly different approach to this project and I look forward to seeing all the final results.

[1] Luke Harrald. "Audio Arts: Semester 2 - Week 6 - Presentations - Nosferatu". Lecture presented at EMU, University of Adelaide, South Australia, 2nd September 2008

Sunday, August 31, 2008

Week 5 Forum - My Favourite Things II

Christianity is not stupid and communism is not good!

This week's forum class was a bit controversial. It takes a lot to offend me, so I wasn't offended, but I definitely would not want my name on a song like that. Religion and politics are two topics you shouldn't discuss unless you're willing to have conflict.

Personally, I would never want to write music about these two topics, because I like to keep my views to myself rather than expose them to the public. Then again, I do admire artists who tastefully write music about important issues.

I think the band Negativland are ignorant for doing what they're doing. I would be ashamed and embarrassed to be famous for their song; it's probably the only kind of commercial success I would turn down. Using sex appeal to sell music is common and not as bad. This is just wrong.

[1] Stephen Whittington, "Music Technology Forum: Semester 2 - Week 5 – My Favourite Things II". Lecture presented at the Electronic Music Unit, Schulz 1009, University of Adelaide, South Australia, 28th August 2008

Friday, August 29, 2008

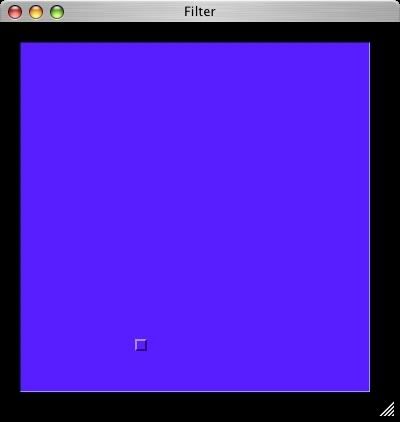

CC3 Week 5 - FFT I

This week I created a GUI for some code that applies an FFT process (PV_RectComb) to white noise. Using the examples we went through in class, I used a 2D slider to control the X-Y co-ordinates instead of the mouse.

While exploring the GUI examples more thoroughly, I found a slider that controlled the window colour. I used the same concept to make the slider change its own colour.
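In the patch, the 2D slider's x value (scaled by 32) sets PV_RectComb's number of teeth, and its y value sets the comb's phase. The width is fixed at 0.2. The Python sketch below is a simplified model of the idea: a rectangular on/off pattern repeated across the spectral bins and applied to a list of magnitudes. It is not PV_RectComb's source, and the exact bin pattern the UGen produces may differ. `rect_comb` is a hypothetical name.

```python
def rect_comb(mags, num_teeth, phase=0.0, width=0.5):
    """Zero spectral bins in a repeating on/off pattern. The pattern repeats
    num_teeth times across the spectrum, starts at `phase` (0..1), and
    `width` (0..1) is the fraction of each tooth that is let through."""
    n = len(mags)
    step = num_teeth / n if n else 0.0
    out = []
    p = phase % 1.0
    for m in mags:
        out.append(m if p <= width else 0.0)   # keep the bin inside the pulse, zero it outside
        p = (p + step) % 1.0
    return out
```

Under this model, a width of 0.2 (as in the patch) lets roughly a fifth of the bins through. That is what carves the regularly spaced gaps into the white noise.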

Click here for an audio example of my patch. [1.7MB]

b = Buffer.alloc(s,2048,1);

(

SynthDef("help-rectcomb2", { arg out=0, bufnum=0, x=0, y=0;

var in, chain;

in = WhiteNoise.ar(0.2);

chain = FFT(bufnum, in);

chain = PV_RectComb(chain, x, y, 0.2);

Out.ar(out, IFFT(chain).dup);

}).send(s);

x = Synth("help-rectcomb2", [\bufnum, b.bufnum]); // pass the FFT buffer explicitly instead of relying on buffer 0

w = SCWindow("Filter", Rect(100, 500, 400, 400))

.front;

w.view.background_(Color.black);

w.alpha = 0.8;

p = SC2DSlider(w, Rect(20, 20,350, 350))

.x_(0.5) // initial location of x

.y_(1) // initial location of y

.action_({p.background = (Color.new(p.x, p.y, 200, 1));

x.set(\x, p.x*32);

x.set(\y, p.y);

// [\sliderX, p.x, \sliderY, p.y].postln;

});

//w.front;

);
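PV_RectComb works in the frequency domain: after the FFT it zeros bins in a repeating pattern of "teeth" across the spectrum, so the white noise comes out with a comb-shaped spectrum. The plain-Python sketch below approximates that masking idea, assuming the pattern is a rectangular wave across the bin index (num_teeth cycles over the spectrum, shifted by phase, passing a fraction width of each cycle); it is not the UGen's source, and the function names are hypothetical. In the patch, num_teeth corresponds to p.x * 32, phase to p.y, and width is fixed at 0.2.

```python
def rect_comb_mask(num_bins, num_teeth, phase, width):
    """Return a list of 0/1 gains, one per FFT bin.

    Assumed model: a rectangular wave with `num_teeth` cycles across the
    spectrum, offset by `phase` (0..1); a bin passes when its position in
    the current cycle is below `width`.
    """
    mask = []
    for k in range(num_bins):
        pos = (k / num_bins * num_teeth + phase) % 1.0
        mask.append(1 if pos < width else 0)
    return mask


def apply_mask(spectrum, mask):
    """Multiply each complex bin by its gain, silencing the masked bins."""
    return [bin_value * gain for bin_value, gain in zip(spectrum, mask)]


if __name__ == "__main__":
    # 16 bins, 4 teeth, no phase shift, 25% of each tooth passes.
    print(rect_comb_mask(16, 4, 0.0, 0.25))
    # → [1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0]
```

Moving the slider right adds teeth (a finer comb); moving it up slides the teeth along the spectrum.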

[1] Christian Haines. "Creative Computing: Semester 2 - Week 5 - FFT I". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 28th August 2008

Week 4 Forum - Practical Composition Workshop IA

I wrote my entire blog entry for this week and then my computer had a fit and I lost it. I cannot be stuffed writing it up again and since no one reads my blog I'm not going to.

[1] Stephen Whittington & David Harris, "Music Technology Forum: Semester 2 - Week 4 – Practical Composition Workshop". Lecture presented at the Electronic Music Unit, EMU Space, University of Adelaide, South Australia, 21st August 2008

Tuesday, August 26, 2008

CC3 Week 4 - Splice & Dice

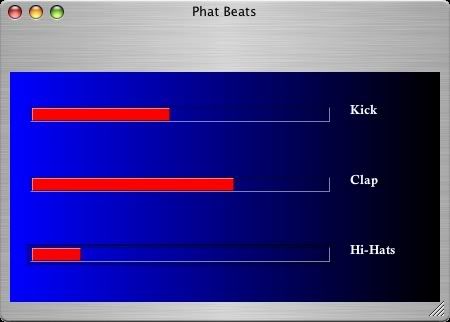

I'm not convinced that my patch even works this week, which is unfortunate because it looks good! I have used the example code and have my drum parts playing as a break beat with stuttering. However, the stuttering doesn't respond the way I want it to. The stutter chance is meant to be controlled using the sliders.
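As the patch's own comment puts it, the stutter chance is the probability that the tail of a phrase gets repeated. One likely reason the sliders have no effect: `stutterchance: ~kickstutterchance` hands BBCutProc11 whatever number the variable holds at creation time (0.5), so later slider moves reassign the variable without ever reaching the cut procedure. The toy model below (hypothetical names, a simplified stand-in rather than BBCutProc11's real algorithm) accepts either a number or a function for the chance and re-reads it every phrase, the way a live slider value would need to be read.

```python
import random


def plan_phrase(num_cuts, stutter_chance, stutter_speed, rng):
    """Return a list of cut lengths (in beats) for one phrase.

    Toy model: the phrase is split into one-beat cuts; with probability
    `stutter_chance` the last cut is replaced by `stutter_speed` rapid
    repeats of a shorter cut. `stutter_chance` may be a number or a
    zero-argument callable, which is re-evaluated on every phrase.
    """
    chance = stutter_chance() if callable(stutter_chance) else stutter_chance
    cuts = [1.0] * num_cuts
    if rng.random() < chance:
        cuts[-1:] = [1.0 / stutter_speed] * stutter_speed
    return cuts


if __name__ == "__main__":
    rng = random.Random(1)
    slider = {"hi": 0.5}

    def live():
        return slider["hi"]  # read the current slider value each phrase

    for value in (0.0, 1.0):
        slider["hi"] = value
        print(value, plan_phrase(4, live, 4, rng))
```

With the slider at 0 no phrase stutters; at 1 every phrase ends in four quarter-beat repeats, and the phrase length stays at four beats either way.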

Below is my code. For an audio example click here [1.7MB].

You will need to place these samples [204KB] on the desktop for my code to work.

(

// Variables

var kick, snare, hats, clock1, clock2, clock3, cutkick, cutsnare, cuthats, cutprockick, cutprocsnare, cutprochats, sbs;

~kickstutterchance = 0.5;

~snarestutterchance = 0.5;

~hatsstutterchance = 0.5;

// WHOLE WINDOW

w = SCWindow.new.front;

// Parameters

w.bounds = Rect(170,150,450,300); // Rectangular Window Bounds (Bottom Left Width Height)

w.name_("Phat Beats"); // Window Name

w.alpha_(0.8); // Transparency

// w.boxColor_(Color.black);

// PANEL

e = SCCompositeView(w,Rect(10,50,430,230));

e.background = Gradient(Color.blue,Color.black);

// Color.new255(100,250,50);

// TEXT (KICK)

n = SCStaticText(e, Rect(350, 80, 100, 20));

n.stringColor_(Color.white);

n.string = "Kick";

n.font_(Font("CalisMTBol", 13));

// SLIDER (KICK)

r = SCRangeSlider(e, Rect(30, 85, 300, 15))

.lo_(0.001)

.hi_(1)

.range_(1)

.knobColor_(HiliteGradient(Color.red, Color.red, Color.red))

.action_({ |slider|

slider.lo=0;

~kickstutterchance = slider.hi;

// r.stringColor_(Color.white);

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

//// NUMBER BOX (KICK)

// r = SCTextField(

// parent: e,

// bounds: Rect(350, 110, 50, 15) // Left Top Width Height

// ).boxColor_(Color.new255(100,0,50));

//

// TEXT (SNARE)

t = SCStaticText(e, Rect(350, 150, 100, 20));

t.stringColor_(Color.white);

t.string = "Clap";

t.font_(Font("CalisMTBol", 13));

// SLIDER (SNARE)

t = SCRangeSlider(e, Rect(30, 85+70, 300, 15))

.lo_(0.0001)

.hi_(1)

.range_(1)

.knobColor_(HiliteGradient(Color.red, Color.red, Color.red))

.action_({ |slider|

slider.lo=0;

~snarestutterchance = slider.hi;

//t.stringColor_(Color.white);

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

//

//// NUMBER BOX (SNARE)

// t = SCTextField(

// parent: e,

// bounds: Rect(350, 180, 50, 15) // Left Top Width Height

// ).boxColor_(Color.new255(100,0,50));

// TEXT (HATS)

u = SCStaticText(e, Rect(350, 220, 150, 20));

u.stringColor_(Color.white);

u.string = "Hi-Hats";

u.font_(Font("CalisMTBol", 13));

// SLIDER (HATS)

u = SCRangeSlider(e, Rect(30, 225, 300, 15))

.lo_(0.001)

.hi_(1)

.range_(1)

.knobColor_(HiliteGradient(Color.red, Color.red, Color.red))

.action_({ |slider|

slider.lo=0;

~hatsstutterchance = slider.hi;

// u.stringColor_(Color.white);

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

//// NUMBER BOX (HATS)

// u = SCTextField(

// parent: e,

// bounds: Rect(350, 250, 50, 15) // Left Top Width Height

// ).boxColor_(Color.new255(100,0,50));

//

// Setup & Initialise Scheduling Clock

clock1 = ExternalClock(

tempoclock: TempoClock(tempo: 2.7725) // 166.35 BPM

);

// Setup & Initialise Scheduling Clock

clock2 = ExternalClock(

tempoclock: TempoClock(tempo: 2.7725) // 166.35 BPM

);

// Setup & Initialise Scheduling Clock

clock3 = ExternalClock(

tempoclock: TempoClock(tempo: 2.7725) // 166.35 BPM

);

// Start Routine

Routine.run({

// Cut Data -- buffer data and segmentation

kick = BBCutBuffer(

filename: "/Users/student/Desktop/kick.wav",

beatlength: 4 // number of beats in soundfile (estimates source tempo)

);

// Cut Data -- buffer data and segmentation

snare = BBCutBuffer(

filename: "/Users/student/Desktop/snare.wav",

beatlength: 4 // number of beats in soundfile (estimates source tempo)

);

// Cut Data -- buffer data and segmentation

hats = BBCutBuffer(

filename: "/Users/student/Desktop/hats.wav",

beatlength: 2 // number of beats in soundfile (estimates source tempo)

);

s.sync; //this forces a wait for the Buffer to load

// Cutting Synthesiser -- playback for buffer

cutkick = CutBuf2(

bbcutbuf: kick, // buffer to cut up

offset: 0.1, // random cut playback probability

dutycycle: CutPBS1({rrand(0.8,1.0)}, 0) // ratio of inter onset interval (delta!? of cuts)

);

// Cutting Synthesiser -- playback for buffer

cutsnare = CutBuf2(

bbcutbuf: snare, // buffer to cut up

offset: 0.1, // random cut playback probability

dutycycle: CutPBS1({rrand(0.8,1.0)}, 0) // ratio of inter onset interval (delta!? of cuts)

);

// Cutting Synthesiser -- playback for buffer

cuthats = CutBuf2(

bbcutbuf: hats, // buffer to cut up

offset: 0.1, // random cut playback probability

dutycycle: CutPBS1({rrand(0.8,1.0)}, 0) // ratio of inter onset interval (delta!? of cuts)

);

cutprockick = BBCutProc11(

phrasebars: 2, // number of phrase bars

stutterchance: {~kickstutterchance}, // phrase tail chance of repetition; a function so the current slider value is read (assumes BBCutProc11 calls .value on it)

stutterspeed: {[4,8].wchoose([0.7,0.3])} // stutterspeed relative to sub division

);

cutprocsnare = BBCutProc11(

phrasebars: 2, // number of phrase bars

stutterchance: {~snarestutterchance}, // phrase tail chance of repetition; a function so the current slider value is read (assumes BBCutProc11 calls .value on it)

stutterspeed: {[4,8].wchoose([0.7,0.3])} // stutterspeed relative to sub division

);

cutprochats = BBCutProc11(

phrasebars: 2, // number of phrase bars

stutterchance: {~hatsstutterchance}, // phrase tail chance of repetition; a function so the current slider value is read (assumes BBCutProc11 calls .value on it)

stutterspeed: {[4,8].wchoose([0.7,0.3])} // stutterspeed relative to sub division

);

// Cutting Scheduler - cut groups and how they will be cut

z = BBCut2(

cutgroups: cutkick, // array of cut groups

proc: cutprockick // algorithmic routine used to generate the cuts

).play(clock1);

// Cutting Scheduler - cut groups and how they will be cut

y = BBCut2(

cutgroups: cutsnare, // array of cut groups

proc: cutprocsnare // algorithmic routine used to generate the cuts

).play(clock2);

// Cutting Scheduler - cut groups and how they will be cut

x = BBCut2(

cutgroups: cuthats, // array of cut groups

proc: cutprochats // algorithmic routine used to generate the cuts

).play(clock3);

});

clock1.play;

clock2.play;

clock3.play;

)
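TempoClock measures tempo in beats per second, which is where 2.7725 comes from: 166.35 BPM divided by 60. BBCutBuffer's beatlength works the other way round: telling it how many beats a file holds lets the file's length imply a source tempo. A small Python check of both conversions (the helper names are hypothetical):

```python
def bpm_to_tempo(bpm):
    """TempoClock tempo is in beats per second, so divide BPM by 60."""
    return bpm / 60.0


def source_bpm(beats, seconds):
    """Tempo implied by a loop of `beats` beats lasting `seconds` seconds."""
    return beats * 60.0 / seconds


if __name__ == "__main__":
    print(bpm_to_tempo(166.35))        # about 2.7725, the value given to TempoClock
    print(1.0 / bpm_to_tempo(166.35))  # about 0.361 s per beat
    print(source_bpm(4, 1.5))          # a 4-beat file lasting 1.5 s implies 160 BPM
```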

[1] Christian Haines. "Creative Computing: Semester 2 - Week 4 - Splice & Dice". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 21st August 2008

Monday, August 25, 2008

AA3 Weeks 4 & 5 - Foley II

My progress this week has been planning how I will gather all the sounds for this assignment. I have expanded my plan from last week to include music and sound design elements. There is some overlap between foley and sound design, because I think the recorded sounds may need some sound design to make the overall effects better.

THEME ELEMENTS (MUSIC/SOUND)

- slide music

- Nosferatu

- wife

MUSIC

- music 01 – outdoor setting

- music 02 – Nosferatu running, on roof top, escapes

- music 03 – wife indoors knitting

- music 02 – Nosferatu on the run

- music 04 – Nosferatu on window sill

- music 03 - (alternate) wife sleeping + scared

- music 04 - (alternate) wooden door opens

- music 05 - death

SCENE ENVIRONMENTS

- outside stone walls

- Nosferatu running through streets

- Nosferatu climbing off roof (filter off yelling)

- indoors

- Nosferatu running through scrub

- window sill

- outside

FOLEY

- outdoor ambience

- running footsteps (individual & many)

- rocks landing on ground

- laughing

- people screaming

- rocks bouncing off the roof

- vampire shuffling and climbing off roof

- smoke off the roof

- wife knitting

- wife deep breath

- footsteps on grass

- shuffling through bush

- vampire jumping

- wife sleeping

- wife suddenly awakes scared

- man sleeping

- wife clasping window sill

- wife opens window

- big wooden doors open

- wife stumbles

- wife pleads for help

- man helps wife on bed

SOUND DESIGN

- slide sound

- dialogue

- evil associated sounds (synth swirl, bass glitches, dissonance)

- indoor pleasing ambience (warm sound)

- outdoor sounds (birds, crickets, trees, wind, scrub)

- running sounds (brushing against bushes)

- signature jump

- beating up fake Nosferatu

- window sill (drone sound)

- peaceful sleeping

- sudden wake up

- breathing

- creaking on window sill

- window open

- big wooden doors open

- death

[1] Luke Harrald. "Audio Arts: Semester 2 - Weeks 4 & 5 - Foley Analysis II - Nosferatu". Lecture presented at EMU, University of Adelaide, South Australia, 19th August 2008

Thursday, August 14, 2008

Week 3 Forum - First Year Student Presentations

[2]

This week's forum was pretty unorganized considering we had a lot of presentations to go through. The presentations were a combination of boring musique concrete pieces, a funny animation, some good mixing skills and some impressive video game music.

I don't know the name of the student, but I was very impressed with the musique concrete piece we were listening to when we were bombarded with the other classical students. Normally I find musique concrete uninspiring, but this piece was very interesting. It's a shame we didn't get to hear it properly.

I thought the animation by Josh was excellent. The music and sound effects were incorporated in the visuals flawlessly.

Alex's mixing of Behind These Walls was excellent too. It's a pity the speakers weren't set up in stereo but from what I heard it sounded great.

The video game music was mint. When he mentioned an Adelaide-based company, I wondered if he meant Sillhouette Studios. I did some work for them in January this year on an internet Flash game.

I don't know the names of most of the first year students, so it makes it harder to write about their presentations. These four presentations were the stand out ones for me.

[1] Lisa Lane-Collins, Josh Thompson, Steven Tanoto, Scott Herriman, Josh Bevan, Alex Bishop, Jacob Simionato, Jamie Seyfang, Scott Kavanagh, Miles Sly, "Music Technology Forum: Semester 2 - Week 3 – First Year Student Presentations". Lecture presented at the Electronic Music Unit, Schulz 1006 & EMU Space, University of Adelaide, South Australia, 14th August 2008

[2] You Say Cement, I Say Concrete, 'Charles & Hudson'. http://www.charlesandhudson.com/archives/2008/05/you_say_cement_i_say_concrete.htm (Accessed 17/8/8)

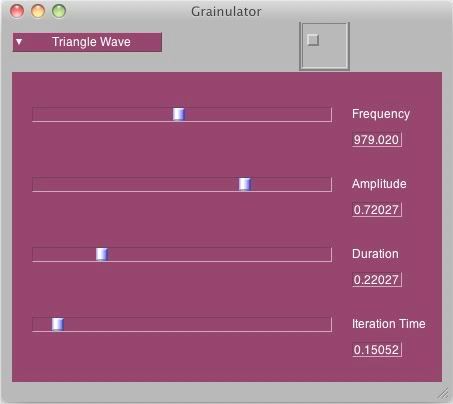

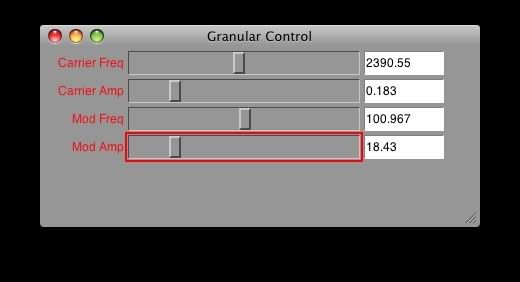

CC3 Week 3 - Granular Synthesis

I ended up choosing the simplest example patch to work with for my granular synthesis patch. This is because I had already made a buffer-based patch last semester and wanted a change. One really annoying part of this week's exercise was my use of the SCRangeSlider. I really should stop using this slider as it isn't designed for what I'm doing. I just prefer how it looks, but it makes the process much harder. When testing my patch, you need to hold down OPTION while dragging the sliders.

For this patch I have created global variables to control the parameters of the granular synthesiser and also used separate synthdefs for each waveform I have made available. There is a lot of repeated code but at this stage I don't know any other way to code this patch with the same outcome.

The colours are okay. They could be better, but with so much messing around I eventually just wanted to get this done.
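Every SynthDef in the patch is the same grain, an oscillator multiplied by an Env.sine envelope (which SuperCollider documents as a Hanning window shape), with only the oscillator swapped. One way to cut the repetition is to keep the waveforms in a table and build the grain from whichever entry is selected. The Python sketch below renders a grain offline to show that table-driven idea; the waveform formulas are naive textbook versions rather than band-limited equivalents of FSinOsc, SinOsc, LFTri, Pulse or Saw, and the names are hypothetical.

```python
import math

# Naive waveforms of phase x (in cycles); each returns a value in -1..1.
WAVEFORMS = {
    "sine": lambda x: math.sin(2 * math.pi * x),
    "triangle": lambda x: 1 - 4 * abs((x + 0.25) % 1.0 - 0.5),
    "square": lambda x: 1.0 if (x % 1.0) < 0.5 else -1.0,
    "saw": lambda x: 2 * (x % 1.0) - 1,
}


def hann(t, dur):
    """Hann window: 0 at the start and end of the grain, 1 in the middle."""
    return 0.5 * (1 - math.cos(2 * math.pi * t / dur))


def grain(wave, freq, amp, dur, sample_rate=44100):
    """Render one grain: the chosen waveform scaled by a Hann envelope."""
    osc = WAVEFORMS[wave]
    n = int(dur * sample_rate)
    return [amp * hann(i / sample_rate, dur) * osc(freq * i / sample_rate)
            for i in range(n)]


if __name__ == "__main__":
    # The patch's starting values: 80 Hz, amplitude 0.05, 1 s grains.
    samples = grain("square", 80, 0.05, 1.0)
    print(len(samples), max(samples))
```

Adding a waveform then means adding one table entry instead of another copy of the whole definition.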

(

var sbs;

~gFreqMenu = 0;

~gFreq = 80;

~gAmp = 0.05;

~gDur = 1.0;

~gWait = 0.01;

SynthDef("FSinOsc", {

// Args

arg gFreq = 440, gAmp = 1.0, gDur = 3.0;

// Vars

var osc, env, envGen, out;

// Unit Generators

osc = FSinOsc.ar(freq: gFreq);

env = Env.sine(

dur: gDur,

level: 1

);

envGen = EnvGen.ar(

envelope: env,

gate: 1,

levelScale: gAmp,

doneAction: 2

);

out = envGen * osc;

// Output

Out.ar(

bus: 0,

channelsArray: out.dup

)

}).send(s);

SynthDef("SinOsc", {

// Args

arg gFreq = 440, gAmp = 1.0, gDur = 3.0;

// Vars

var osc, env, envGen, out;

// Unit Generators

osc = SinOsc.ar(freq: gFreq);

env = Env.sine(

dur: gDur,

level: 1

);

envGen = EnvGen.ar(

envelope: env,

gate: 1,

levelScale: gAmp,

doneAction: 2

);

out = envGen * osc;

// Output

Out.ar(

bus: 0,

channelsArray: out.dup

)

}).send(s);

SynthDef("LFTri", {

// Args

arg gFreq = 440, gAmp = 1.0, gDur = 3.0;

// Vars

var osc, env, envGen, out;

// Unit Generators

osc = LFTri.ar(freq: gFreq);

env = Env.sine(

dur: gDur,

level: 1

);

envGen = EnvGen.ar(

envelope: env,

gate: 1,

levelScale: gAmp,

doneAction: 2

);

out = envGen * osc;

// Output

Out.ar(

bus: 0,

channelsArray: out.dup

)

}).send(s);

SynthDef("Pulse", {

// Args

arg gFreq = 440, gAmp = 1.0, gDur = 3.0;

// Vars

var osc, env, envGen, out;

// Unit Generators

osc = Pulse.ar(freq: gFreq);

env = Env.sine(

dur: gDur,

level: 1

);

envGen = EnvGen.ar(

envelope: env,

gate: 1,

levelScale: gAmp,

doneAction: 2

);

out = envGen * osc;

// Output

Out.ar(

bus: 0,

channelsArray: out.dup

)

}).send(s);

SynthDef("Saw", {

// Args

arg gFreq = 440, gAmp = 1.0, gDur = 3.0;

// Vars

var osc, env, envGen, out;

// Unit Generators

osc = Saw.ar(freq: gFreq);

env = Env.sine(

dur: gDur,

level: 1

);

envGen = EnvGen.ar(

envelope: env,

gate: 1,

levelScale: gAmp,

doneAction: 2

);

out = envGen * osc;

// Output

Out.ar(

bus: 0,

channelsArray: out.dup

)

}).send(s);

//w.close;

// WHOLE WINDOW

w = SCWindow.new.front;

// Parameters

w.bounds = Rect(170,150,450,380); // Rectangular Window Bounds (Bottom Left Width Height)

w.name_("Grainulator"); // Window Name

w.alpha_(0.8); // Transparency

// w.boxColor_(Color.black);

// PANEL

e = SCCompositeView(w,Rect(10,50,430,310));

e.background = Color.new255(100,0,50);

//Gradient(Color.blue,Color.black);

// DROP DOWN MENU (WAVEFORM)

l = [

"Fast Sine Wave","Sine Wave","Triangle Wave","Square Wave","Saw Tooth Wave"];

sbs = SCPopUpMenu(w,Rect(10,10,150,20));

sbs.items = l;

sbs.background_(Color.new255(100,0,50));

sbs.stringColor_(Color.white);

sbs.action = { arg sbs;

~gFreqMenu = sbs.value.postln;

};

// TEXT (FREQ)

r = SCStaticText(e, Rect(350, 80, 100, 20));

r.stringColor_(Color.white);

r.string = "Frequency";

r.font_(Font("Arial", 12));

// SLIDER (FREQ)

r = SCRangeSlider(e, Rect(30, 85, 300, 15))

.lo_(0.001)

.hi_(1)

.range_(1)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.red))

.action_({ |slider|

slider.lo=0;

r.value=""++(slider.hi*2000) ++"Hz";

~gFreq = slider.hi*2000;

r.stringColor_(Color.white);

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (FREQ)

r = SCTextField(

parent: e,

bounds: Rect(350, 110, 50, 15) // Left Top Width Height

).boxColor_(Color.new255(100,0,50));

// TEXT (AMP)

t = SCStaticText(e, Rect(350, 150, 100, 20));

t.stringColor_(Color.white);

t.string = "Amplitude";

t.font_(Font("Arial", 12));

// SLIDER (AMP)

t = SCRangeSlider(e, Rect(30, 85+70, 300, 15))

.lo_(0.0001)

.hi_(1)

.range_(1)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.red))

.action_({ |slider|

slider.lo=0;

t.value=""++(slider.hi*10) ++"";

~gAmp = slider.hi*10;

t.stringColor_(Color.white);

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (AMP)

t = SCTextField(

parent: e,

bounds: Rect(350, 180, 50, 15) // Left Top Width Height

).boxColor_(Color.new255(100,0,50));

// TEXT (DUR)

u = SCStaticText(e, Rect(350, 220, 150, 20));

u.stringColor_(Color.white);

u.string = "Duration";

u.font_(Font("Arial", 12));

// SLIDER (DUR)

u = SCRangeSlider(e, Rect(30, 225, 300, 15))

.lo_(0.001)

.hi_(1)

.range_(1)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.blue))

.action_({ |slider|

slider.lo=0;

u.value=""++(slider.hi*1) ++"s";

~gDur = slider.hi*1;

u.stringColor_(Color.white);

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (DUR)

u = SCTextField(

parent: e,

bounds: Rect(350, 250, 50, 15) // Left Top Width Height

).boxColor_(Color.new255(100,0,50));

// TEXT (WAIT)

p = SCStaticText(e, Rect(350, 290, 150, 20));

p.stringColor_(Color.white);

p.string = "Iteration Time";

p.font_(Font("Arial", 12));

// SLIDER (WAIT)

p = SCRangeSlider(e, Rect(30, 295, 300, 15))

.lo_(0.001)

.hi_(1)

.range_(1)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.red))

.action_({ |slider|

slider.lo=0;

p.value=""++(0.01/slider.hi*1) ++"s";

~gWait = 0.01/slider.hi*1;

p.stringColor_(Color.white);

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (WAIT)

p = SCTextField(

parent: e,

bounds: Rect(350, 320, 50, 15) // Left Top Width Height

).boxColor_(Color.new255(100,0,50));

)

(

Routine({

inf.do({

~gFreqMenu.switch(0,{Synth("FSinOsc", [\gFreq, ~gFreq, \gAmp, ~gAmp, \gDur, ~gDur])},

1,{Synth("SinOsc", [\gFreq, ~gFreq, \gAmp, ~gAmp, \gDur, ~gDur])},

2,{Synth("LFTri", [\gFreq, ~gFreq, \gAmp, ~gAmp, \gDur, ~gDur])},

3,{Synth("Pulse", [\gFreq, ~gFreq, \gAmp, ~gAmp, \gDur, ~gDur])},

4,{Synth("Saw", [\gFreq, ~gFreq, \gAmp, ~gAmp, \gDur, ~gDur])}

);

// Create Grain

// Synth("Pulse", [\gFreq, {rrand(80, 300).postln}, \gAmp, 0.05, \gDur, 1.0]);

// [1000, 8000] [200, 400]

// Grain Delta - 0.1, 0.01

~gWait.wait;

})

}).play;

)
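The sliders map their 0..1 position onto the grain parameters: frequency is hi * 2000 Hz, amplitude hi * 10, duration hi * 1 s, and the wait between grains 0.01 / hi s. Because the Routine starts a new grain every ~gWait seconds while each grain lasts ~gDur, roughly gDur / gWait grains sound at once, which drives both the density and the loudness of the texture. A small Python check of that arithmetic (function names are hypothetical):

```python
def slider_to_params(hi):
    """Mirror the patch's slider scalings for a slider at position hi (0..1)."""
    return {
        "freq_hz": hi * 2000,
        "amp": hi * 10,
        "dur_s": hi * 1,
        "wait_s": 0.01 / hi,  # hi must be > 0, otherwise the wait is infinite
    }


def grain_density(dur_s, wait_s):
    """Grains started per second, and the average number sounding at once."""
    per_second = 1.0 / wait_s
    overlap = dur_s / wait_s
    return per_second, overlap


if __name__ == "__main__":
    # The patch's starting values: ~gDur = 1.0, ~gWait = 0.01.
    print(grain_density(1.0, 0.01))  # about 100 grains/s, about 100 overlapping
```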

[1] Christian Haines. "Creative Computing: Semester 2 - Week 3 - Granular Synthesis". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 14th August 2008

AA3 Week 3 - Foley I

For this week's exercise I have started on the sound of a window opening. I recorded a chair in Studio 1: I set up a microphone underneath it and rocked it back and forth to create a creaking sound. I then added some reverb to give the sound some space. There is too much reverb at this stage, but I didn't spend a lot of time on this sound; it was really just to demonstrate where my ideas are heading.

Click here to hear my sound so far.

These are the rest of the foley elements in the third clip that I will be working with:

- outdoor ambience

- running footsteps (individual & many)

- rocks landing on ground

- laughing

- people screaming

- rocks bouncing off the roof

- vampire shuffling and climbing off roof

- smoke off the roof

- wife knitting

- wife deep breath

- footsteps on grass

- shuffling through bush

- vampire jumping

- wife sleeping

- wife suddenly awakes scared

- man sleeping

- wife clasping window sill

- wife opens window

- big wooden doors open

- wife stumbles

- wife pleads for help

- man helps wife on bed

[1] Luke Harrald. "Audio Arts: Semester 2 - Week 3 - Foley Analysis I - Nosferatu". Lecture presented at EMU, University of Adelaide, South Australia, 12th August 2008

Thursday, August 07, 2008

Week 2 Forum - My Favourite Things I

[2]

Today's Music Technology Forum class didn't really include "music technology". Well, we did use a CD player. Two hours of this class were spent listening to music. When listening to David's piece, my first feeling was sympathy for the performers. I've never seen a piece with so many changing time signatures. I would find it near impossible to play, but I guess that's because I'm not a composition student. The varying tones and dynamic range of the piece sounded good, but there wasn't any kind of timing structure. I think David was actually going for this idea.

The Schubert piece we listened to was okay. I prefer Beethoven when it comes to classical music. Schubert's music tends to be a lot more repetitive. David mentioned that this piece reminded him of minimal music. I can definitely see why. Minimal music can be very effective, but I prefer Philip Glass or minimal electronic music.

I don't think this class was very productive. We hardly did anything. The break was good.

[1] David Harris, "Music Technology Forum: Semester 2 - Week 2 – My Favourite Things". Lecture presented at the Electronic Music Unit, Schulz 1109, University of Adelaide, South Australia, 7th August 2008

[2] Redfin, 'A Few of My Favorite Things (Sort Of): 2 Kinds of Gas, BART and Being a Homeowner'. http://sfbay.redfin.com/blog/category/sweet_digs_classic/ (Accessed 7th August 2008)

AA3 Week 2 - Sound FX Analysis - The Matrix Lobby Scene*

One of the best scenes in The Matrix is the lobby scene. I have analysed it and listed the sound design, foley and music. There is so much going on at once that I haven't included the times at which these events occur, as it would take too long. The clip is below so you can follow what I have included.

[2]

Sound Design

pile driver

bag slide

machine beep

jacket opening (swoosh!)

reverse woosh

gun spring sound

lift beep

body fall

swoooosh

hand combat SFX

shells being fired

empty shells dropping to the ground

Foley

footsteps

dialogue

strike

gun fire (machine, shot gun, pistol)

gun cock

gun dropping

army footsteps

smashing pillars

smashed pillars rock fragments dropping

shoe sliding on floor

lift door opening/closing

lift "bing" (which for some reason goes off when they are in the lift?)

Music

pile driver

rhythm element

background tone

Propellerheads soundtrack

[1] Luke Harrald. "Audio Arts: Semester 2 - Week 2 - Sound FX Analysis - The Matrix Lobby Scene". Lecture presented at EMU, Audiolab, University of Adelaide, South Australia, 5th August 2008

[2] YouTube. "Matrix Lobby Scene". www.youtube.com (Accessed 13/8/8)

CC3 Week 2 - Graphical User Interface II

I was lucky this week, as one of my synthdefs was very similar to the example we went through. This is a simple AM synth. I have used the array method, but I couldn't work out how to change the colours and make it more customizable. In my code I have used the EZSlider, which limits what you can do quite a bit.
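In the SinTone SynthDef the modulator is a sawtooth (LFSaw) scaled by mAmp, and it multiplies the carrier's amplitude, so each output sample is roughly cAmp * mAmp * saw(mFreq * t) * sin(2π * cFreq * t). The Python sketch below evaluates that product at a single time. The sawtooth here is a naive one starting at -1, an assumption that may not match LFSaw's exact phase. Strictly speaking, because the modulator swings between negative and positive values with no DC offset, this is closer to ring modulation than classic AM.

```python
import math


def saw(phase_cycles):
    """Naive bipolar sawtooth: rises from -1 to 1 once per cycle."""
    return 2.0 * (phase_cycles % 1.0) - 1.0


def sin_tone_sample(t, c_freq=500.0, c_amp=0.5, m_freq=3.0, m_amp=0.75):
    """One output sample of the SinTone patch at time t (seconds).

    Defaults mirror the SynthDef's argument defaults.
    """
    modulator = m_amp * saw(m_freq * t)
    return math.sin(2 * math.pi * c_freq * t) * modulator * c_amp


if __name__ == "__main__":
    # A quarter of a carrier cycle in, the sine is at its peak.
    print(sin_tone_sample(1 / (4 * 500.0)))
```

The peak output is c_amp * m_amp, so with the Mod Amp slider at its maximum of 100 and Carrier Amp at 1 the signal can reach 100, far beyond full scale.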

Below is my finished code. For an audio example click here [1.8MB].

//Synth Def

(

SynthDef("SinTone", {

arg cFreq = 500, cAmp = 0.5, mFreq = 3, mAmp = 0.75;

var carrier, modulator, out;

modulator = LFSaw.ar(freq: mFreq, mul: mAmp);

carrier = SinOsc.ar(freq: cFreq, mul: modulator * cAmp);

out = Out.ar(bus: 0, channelsArray: carrier);

}).send(s);

)

~asynth = Synth("SinTone");

(

// Variables

var win, sliderData;

// Build Slider Data

sliderData = [

// Label, [Min, Max], Initial value, Synth parameter

["Carrier Freq", [10.0, 5000.0], 200.0, \cFreq],

["Carrier Amp", [0.0, 1.0], 0.1, \cAmp],

["Mod Freq", [0.1, 200.0], 0.1, \mFreq],

["Mod Amp", [0.1, 100.0], 0.1, \mAmp]

];

// Window Setup

win = GUI.window.new(

name: "Granular Control",

bounds: Rect(20, 400, 440, 180)

).front;

win.view.decorator = FlowLayout(win.view.bounds);

// Build one EZSlider (label, slider, number box) for each row of sliderData

sliderData.do({

// Arguments

arg val, idx;

// Variables

var guiEZ, specGUI;

// Build Slider

guiEZ = EZSlider(

win, // window

400 @ 24, // dimensions

val[0], // label

ControlSpec(val[1][0], val[1][1], \lin), // control spec

{|ez| ~asynth.set(val[3], ez.value);}, // action

val[2] // initial Value

);

guiEZ.labelView.stringColor_(Color.red);

guiEZ.sliderView.focusColor_(Color.red);

guiEZ.numberView.stringColor_(Color.red);

});

)
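EZSlider passes the slider's 0..1 position through the ControlSpec, which maps it into each parameter's range. With the \lin warp used here the mapping is min + x * (max - min); an \exp warp instead gives min * (max / min) ** x, which tends to spread frequency ranges more evenly to the ear. A Python sketch of both formulas (function names are hypothetical):

```python
def lin_map(x, lo, hi):
    """Linear warp: equal slider travel gives equal steps in value."""
    return lo + x * (hi - lo)


def exp_map(x, lo, hi):
    """Exponential warp: equal slider travel gives equal ratios (lo, hi > 0)."""
    return lo * (hi / lo) ** x


if __name__ == "__main__":
    # Carrier Freq row from sliderData: 10 Hz to 5000 Hz, slider halfway.
    print(lin_map(0.5, 10.0, 5000.0))  # 2505.0
    print(exp_map(0.5, 10.0, 5000.0))  # about 223.6
```

Halfway along a linear 10 to 5000 Hz slider is already 2505 Hz, which is why the low end of the Carrier Freq slider feels cramped.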

I did have a go at building a more detailed GUI, but I couldn't work out how to change the scaling. Here is the code if you're interested.

[1] Christian Haines. "Creative Computing: Semester 2 - Week 2 - Graphical User Interface II". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 7th August 2008

Saturday, August 02, 2008

Week 1 Forum - Listening Culture

I can’t remember who said it, but I do believe that the main reason why people listen to music whilst doing other things is simply because “we can”. Music is so portable now that it wouldn’t really make sense to sit down and do nothing but listen to music. For us in the Elder Conservatorium, it is a different way of thinking. We may do it because we are studying music and can appreciate music on its own. I don’t believe that the average person would think like that, especially the younger generation.

Music has changed in a number of ways, but the culture of listening to music has changed because music is more portable and also because it is now associated with visual content. Stephen gave us the example of how in the past a family would sit in front of the radio and listen to see what was on. That still happens, except now it's called television.

[2]

[1] Stephen Whittington, "Music Technology Forum: Semester 2 - Week 1 – Listening Culture". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 31st July 2008

[2] Google Video, 'SNL iPod'. www.broadcaster.com (Accessed 1st August 2008)

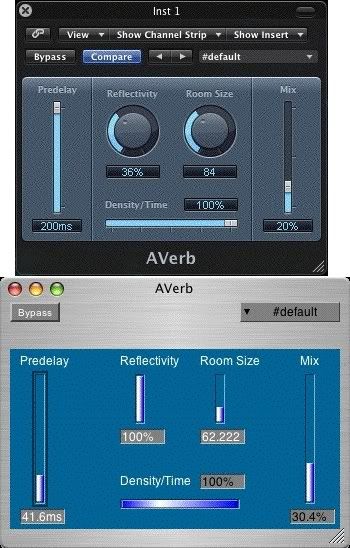

CC3 Week 1 - Graphical User Interface I

My computer died this week, which is pretty annoying since I actually did do this week's SC patch. Hopefully I can get my data back and you'll see my attempt at recreating Logic's reverb plugin, AVerb.

...OK, I have my patch. I say it's worth $650. That's what it cost to restore the data from my laptop. Typical Apple...

The biggest challenge at first was the positioning. As Christian said, at first it doesn't make sense. Once I got my first slider working with the text and number box, it was a matter of copying the code and editing the positioning and the value of each slider. I also had to set the slider to start at 0. This was annoying at first because, by default, the SCRangeSlider starts where the user clicks.

(

var sbs;

//w.close;

// WHOLE WINDOW

w = SCWindow.new.front;

// Parameters

w.bounds = Rect(170,150,350,250); // Rectangular Window Bounds (Bottom Left Width Height)

w.name_("AVerb"); // Window Name

w.alpha_(0.9); // Transparency

// w.boxColor_(Color.black);

// BLUE PANEL

e = SCCompositeView(w,Rect(10,50,330,180));

e.background = Color.new255(0,100,150);

// Gradient(Color.red,Color.white);

// TEXT (PREDELAY)

d = SCStaticText(e, Rect(20, 50, 100, 20));

d.stringColor_(Color.white);

d.string = "Predelay";

d.font_(Font("Arial", 12));

// BYPASS BUTTON

f = SCButton(w, Rect(10,3,50,20))

.states_([

["Bypass", Color.white, Color.grey],

["Bypass", Color.white, Color.red],

])

.font_(Font("Arial", 10))

.action_({ arg butt;

butt.value.postln;

});

// SLIDER (PREDELAY)

a = SCRangeSlider(e, Rect(35, 75, 10, 130))

.lo_(0.0)

.hi_(1)

.range_(0.01)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.red))

.action_({ |slider|

slider.lo=0;

n.value=""++(slider.hi*200) ++"ms";

n.stringColor_(Color.white);

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (PREDELAY)

n = SCTextField(

parent: e,

bounds: Rect(20, 210, 45, 15) // Left Top Width Height

).boxColor_(Color.grey);

// SLIDER (MIX)

b = SCRangeSlider(e, Rect(305, 75, 10, 130))

.lo_(0.0)

.hi_(1)

.range_(0.01)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.red))

.action_({ |slider|

slider.lo=0;

m.value=""++(slider.hi*100) ++"%";

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (MIX)

m = SCTextField(

parent: e,

bounds: Rect(290, 210, 45, 15) // Left Top Width Height

).boxColor_(Color.grey);

// TEXT (MIX)

g = SCStaticText(e, Rect(300, 50, 100, 20));

g.stringColor_(Color.white);

g.string = "Mix";

g.font_(Font("Arial", 12));

// SLIDER (DENSITY/TIME)

c = SCRangeSlider(e, Rect(120, 200, 120, 10))

.lo_(0)

.range_(0.1)

// .range_(0.01)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.red))

.action_({ |slider|

slider.lo=0;

o.value=""++(slider.hi*100) ++"%";

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (DENSITY/TIME)

o = SCTextField(

parent: e,

bounds: Rect(200, 175, 45, 15) // Left Top Width Height

).boxColor_(Color.grey);

// TEXT (DENSITY/TIME)

p = SCStaticText(e, Rect(120, 170, 500, 20));

p.stringColor_(Color.white);

p.string = "Density/Time";

p.font_(Font("Arial", 12));

// TEXT (REFLECTIVITY)

q = SCStaticText(e, Rect(120, 50, 100, 20));

q.stringColor_(Color.white);

q.string = "Reflectivity";

q.font_(Font("Arial", 12));

// SLIDER (REFLECTIVITY)

q = SCRangeSlider(e, Rect(135, 75, 10, 50))

.lo_(0.0)

.hi_(1)

.range_(0.01)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.red))

.action_({ |slider|

slider.lo=0;

q.value=""++(slider.hi*100) ++"%";

q.stringColor_(Color.white);

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (REFLECTIVITY)

q = SCTextField(

parent: e,

bounds: Rect(120, 130, 45, 15) // Left Top Width Height

).boxColor_(Color.grey);

// TEXT (ROOM SIZE)

r = SCStaticText(e, Rect(200, 50, 100, 20));

r.stringColor_(Color.white);

r.string = "Room Size";

r.font_(Font("Arial", 12));

// SLIDER (ROOM SIZE)

r = SCRangeSlider(e, Rect(215, 75, 10, 50))

.lo_(0.0)

.hi_(1)

.range_(0.01)

.knobColor_(HiliteGradient(Color.blue, Color.white, Color.red))

.action_({ |slider|

slider.lo=0;

r.value=""++(slider.hi*200) ++"";

r.stringColor_(Color.white);

[\sliderLOW, slider.lo, \sliderHI, slider.hi].postln;

});

// NUMBER BOX (ROOM SIZE)

r = SCTextField(

parent: e,

bounds: Rect(200, 130, 45, 15) // Left Top Width Height

).boxColor_(Color.grey);

// DROP DOWN MENU (#DEFAULT)

l = [

"#default","-","Next Setting","Previous Setting","Copy Setting",

"Paste Setting","Reset Setting"

];

sbs = SCPopUpMenu(w,Rect(240,3,100,20));

sbs.items = l;

sbs.background_(Color.grey);

sbs.action = { arg sbs;

};

)
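The number boxes only display the slider position scaled into each parameter's units (predelay hi * 200 ms, mix and reflectivity hi * 100 %). If the GUI were later wired to an actual delay line, the predelay would also need converting from milliseconds to samples. A minimal sketch, assuming a 44.1 kHz sample rate (both the rate and the function name are assumptions, not part of the patch):

```python
def predelay_samples(hi, max_ms=200.0, sample_rate=44100):
    """Convert the predelay slider's 0..1 value into a delay in samples."""
    delay_ms = hi * max_ms
    return round(delay_ms / 1000.0 * sample_rate)


if __name__ == "__main__":
    print(predelay_samples(1.0))   # 200 ms -> 8820 samples
    print(predelay_samples(0.25))  # 50 ms -> 2205 samples
```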

[1] Christian Haines. "Creative Computing: Semester 2 - Week 1 - Graphical User Interface I". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 31st July 2008

AA3 Week 1 - Film Sound & Music Overview

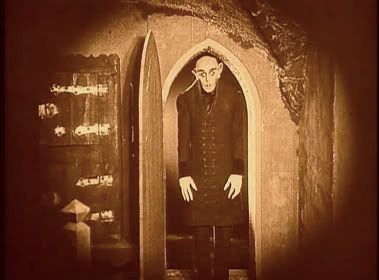

I was pretty disappointed that our film for this semester is some 90-year-old film I've never heard of. Still, I guess making sound effects and music for it will be fun. I have chosen the first two minutes of the second clip we were provided with, because there are a lot more indoor scenes and I think it will be more fun making those eerie indoor soundscapes. This section of the film is pretty freaky, and for its time I think it would have been very scary. This film, Nosferatu: A Symphony of Horror, has some chilling parts, and I'm sure with some clever sound design skills I can make it even scarier.

|0.00 - 1.08| - Indoor setting / wood surroundings / possible dialogue / sounds of man flicking through book

|1.08 - 1.52| - clock / door knock / door opening / Dracula / door slam / man scared / outdoor noises

|1.52 - 2.25| - doors open / man scared hiding / vampire

[2]

I've only looked at the overall sounds very briefly. Later this semester I will go into a lot more depth.

[1] Luke Harrald. "Audio Arts: Semester 2 - Week 1 Film Sound & Music Overview". Lecture presented at EMU, Audiolab, University of Adelaide, South Australia, 29th July 2008

[2] Bram Stoker & Henrik Galeen - Nosferatu: A Symphony of Horror. Dir. Murnau F.W. Videocassette. Germany: Film Arts Guild, 1922. 94 min.

Thursday, June 26, 2008

CC3 Major Project - Sound Project

For my major assignment I have created a piece of varying aesthetic qualities. I have used sounds from different synthdefs that I created. These were:

SinTone

Klang

BrownNoise

BufferPlay

Reverb

Filter

The piece was sequenced using a series of Pseq streams. I also used Pshuf and Pbind. I varied the carrier and modulating frequencies and amplitudes throughout. I also used different timings and varied the number of iterations. Brown noise was incorporated in a similar fashion. The bell-like sounds, from the Klang UGen, were sequenced at varying pitches and delayed by varying times. All of these controls used my initial equation, which produced an array of numbers that was substituted into the other UGen parameters.

I have timed the piece using the SystemClock. The final sample manipulation used playback speed and some modulation. Aside from SuperCollider being difficult to code, the only problem at the end was clipping in the audio recording. I didn't have time to fix this.
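Changing playback speed on a buffer (for example through the `rate` argument of SuperCollider's PlayBuf, which is an assumption about how the BufferPlay SynthDef works) resamples the audio, so speed and pitch change together: doubling the rate halves the length and raises the pitch by an octave. A minimal Python sketch of that arithmetic, assuming plain resampling with no time-stretching; the function names are hypothetical.

```python
import math

def playback_duration(seconds, rate):
    """Length of a sample played at `rate` times normal speed (negative rates play backwards)."""
    if rate == 0:
        raise ValueError("rate must be non-zero")
    return seconds / abs(rate)

def semitone_shift(rate):
    """Pitch change in semitones when a sample is resampled at `rate`."""
    if rate == 0:
        raise ValueError("rate must be non-zero")
    return 12 * math.log2(abs(rate))

if __name__ == "__main__":
    # A hypothetical 4-second sample at a few playback rates.
    for rate in (0.5, 1.0, 1.5, 2.0):
        print(rate, playback_duration(4.0, rate), round(semitone_shift(rate), 2))
```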

[1]

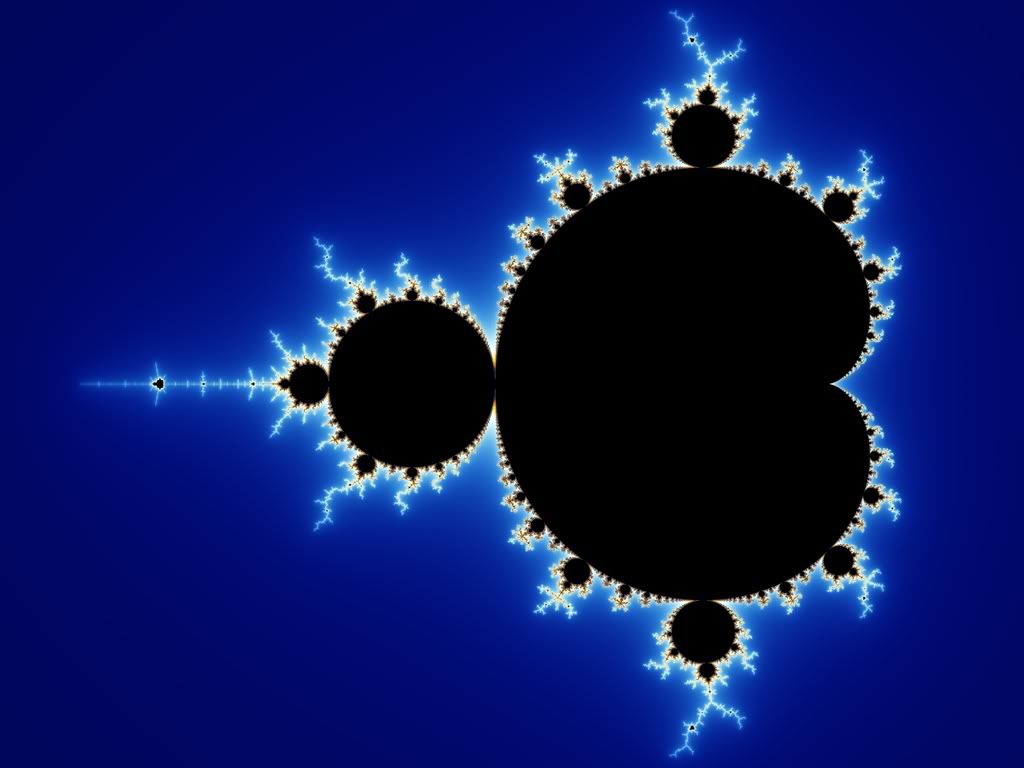

My equation was based on this fractal, which is called the Mandelbrot set.
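The post doesn't give the equation itself, so this is only a guess at the kind of mapping involved. The Mandelbrot set is defined by iterating z(n+1) = z(n)^2 + c from z(0) = 0 and checking whether |z| stays bounded; once |z| exceeds 2 the sequence is guaranteed to escape. A minimal Python sketch, assuming the "array of numbers" was made of escape-time counts sampled along a horizontal line in the complex plane and scaled into a frequency range; the sampling line, the 200 to 2000 Hz range and all function names are hypothetical.

```python
def escape_time(c, max_iter=50):
    """Count iterations of z -> z*z + c (from z = 0) before |z| exceeds 2.
    Points that never escape within max_iter are treated as inside the set."""
    z = 0j
    for n in range(max_iter):
        if abs(z) > 2:
            return n
        z = z * z + c
    return max_iter

def mandelbrot_row(y, x_min=-2.0, x_max=1.0, steps=8, max_iter=50):
    """Escape-time counts for `steps` evenly spaced points on the line Im(c) = y."""
    width = (x_max - x_min) / (steps - 1)
    return [escape_time(complex(x_min + i * width, y), max_iter) for i in range(steps)]

def to_frequencies(counts, low=200.0, high=2000.0, max_iter=50):
    """Linearly map iteration counts onto a frequency range in Hz (hypothetical mapping)."""
    return [low + (high - low) * n / max_iter for n in counts]

if __name__ == "__main__":
    counts = mandelbrot_row(0.3, steps=12)
    print(counts)
    print(to_frequencies(counts))
```

Points near the edge of the set escape after many iterations and points far away escape almost at once, which gives an irregular but deterministic series of values to feed into synth parameters.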

I have attached my code, documentation and an audio recording of my piece.

Code [1.4MB]

Documentation [468KB]

Audio 4'24 (low quality) [1.2MB]

Audio 4'24 (high quality) [10.1MB]

[1] Mandelbrot set, Wikipedia. http://en.wikipedia.org/wiki/Mandelbrot_set (Accessed 26/6/2008)

Thursday, June 19, 2008

AA3 - Major Assignment - Recording Project

For my major assignment I've recorded two bands. The first is a jazz ensemble at this university. I organised this band through a friend who's been in the jazz scene for a while. His name is Reid Jones, and he's a club DJ, promoter, sound engineer and music producer.

I was happy with the quality of the musicians, but I didn't have time to do the proper recording that I wanted. At the time I didn't mind so much, because I was desperate for an ensemble to record, seeing as my original string quartet had bailed on me. When I recorded this jazz ensemble, I only had an hour to set up and record, because the trumpet player had to leave and they all wanted to play together. Considering the time restriction, I think I did pretty well. We only did one take.

My second band was a rock band. I met the bass player when I was studying at SAE. I think this band is really talented. I had a fun time recording them, and I look forward to seeing how they go in their career.

Below is the documentation and the songs.

Donna Lee [7.7MB]

I'm Over You [6.6MB]

Bird [7.3MB]

Documentation [648KB]

Friday, May 30, 2008

Week 12 Forum - Scratch II

We were going to have some student presentations today, but once again neither student showed up. That's a bit of a worry really... two weeks in a row?

Anyway, Stephen came to the rescue and played us more of that awesome Scratch DVD. After watching the scratching lessons by Qbert, I decided to put them into practice. Well, to be honest, I already knew a little about scratching, but I'm not a scratch DJ. I am a mix DJ. I have been a DJ for around 5 years and have played in heaps of clubs around Adelaide. I've used everything from vinyl to CDs to mixing MP3s. I still prefer old-school vinyl.

Mixing and scratching are not easy techniques. I'm glad we touched on this topic in forum, so people can get the idea out of their heads that DJs "just play music". It's so much more than that. Aside from the skills of mixing, there are also the skills a DJ needs to work the crowd. You need to play certain songs at certain times so the crowd will buy drinks. For example, if you play too many good songs, people will stay on the dance floor and not buy drinks. You need to work the crowd and make them do what you want them to do. I shouldn't give away too many secrets!

As a mix DJ, a common skill is to play two songs together to create a new song. I have done this in my demonstration. As a scratch DJ, there are skills of dropping in vocal stabs and samples to create new sounds. There is a lot of skill involved in DJing, and I think the many talentless bigheads out in Adelaide have given the rest a bad name.
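Playing two songs together usually starts with beatmatching: nudging one deck's pitch fader until both tracks run at the same tempo. The adjustment is simple arithmetic, sketched here in Python. The ±8% fader range is an assumption (it is a common range on DJ turntables, but decks vary), and the function names are hypothetical.

```python
def pitch_adjust_percent(source_bpm, target_bpm):
    """Percentage the pitch fader must move so a track at source_bpm plays at target_bpm."""
    return (target_bpm / source_bpm - 1.0) * 100.0

def can_beatmatch(source_bpm, target_bpm, fader_range=8.0):
    """True if the required adjustment fits within a +/- fader_range percent pitch fader."""
    return abs(pitch_adjust_percent(source_bpm, target_bpm)) <= fader_range

if __name__ == "__main__":
    # Hypothetical tempos: bring a 126 BPM record up to a 128 BPM record.
    print(round(pitch_adjust_percent(126, 128), 2), can_beatmatch(126, 128))
    print(round(pitch_adjust_percent(120, 140), 2), can_beatmatch(120, 140))
```

On a turntable the pitch fader changes speed and pitch together, which is why big tempo jumps also make the key drift.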

I have prepared a little movie of my mixing. It's a bit long, but you can just fast-forward if you're bored. Enjoy!

DJ Reverie in the mix!


My record collection is around 500 records, so I still have a bit to go before I can compare to DJ Shadow... A typical Saturday night...

[1] Stephen Whittington, "Music Technology Forum: Semester 1 - Week 12 – Scratch". Lecture presented at the Electronic Music Unit, University of Adelaide, South Australia, 5th June 2008